Transforming the Future of Technology | Data & Analytics Maestro, Intelligent Application, Cybersecurity Innovator and Cloud Champion

I'm a trailblazer in the tech world, celebrated for my forward-thinking approach in Data Modernization, GenAI, Intelligent Applications, Cybersecurity, and Cloud Transformations. As a Technology Strategist, Coach & Mentor, and Microsoft MVP, I've dedicated my career to revolutionizing how we interact with and leverage technology, consistently staying ahead of the curve.

Leadership Through Innovation

Currently steering the ship at Onix as the Senior Vice President, Global Lead - Data & Analytics and Intelligent Apps Practice, I specialize in pioneering solutions in Data Modernization, Data Governance, Advanced Analytics and AI/ML.

Earlier, my tenure at Avanade was marked by the successful creation of a $550 million Security Services practice, focusing on comprehensive cybersecurity.

A Track Record of Excellence

- Developed Avanade's 'Everything on Azure' initiative, generating an impressive $310 million portfolio.

- Established a $520M Cloud and Modern Application business for Avanade (APAC and LATAM).

- Key player at Microsoft in developing a $1 billion Azure consumption business, leading a global team of Cloud Solution Architects.

Accolades and Industry Impact

My journey is peppered with significant revenue boosts and industry recognition, stemming from a deep understanding of technology paired with strategic business acumen.

Lifelong Learning and Expertise

I pride myself on my commitment to continuous learning, holding an array of certifications such as Microsoft Certified Architect Solutions and Databases, TOGAF 9, AWS Solutions Architect – Professional, among others.

My Core Specializations

- Championing Data Modernization, Data Governance, and AI/ML solutions for Data-Driven Business Transformation.

- Championing Cyber Resilience and spearheading Digital Evolution.

- Creating groundbreaking Solution offerings and Go-to-Market strategies.

- Cultivating scalable practices and nurturing high-performance teams.

- Rich industry experience spanning ITES, FSI, Retail, Public Sector, and Oil & Gas.

- Deep expertise in Azure, GCP, AWS, and AI/ML.

I believe in the power of looking forward and embracing technological challenges as opportunities. My mission is to lead at the vanguard of the tech industry, shaping solutions that not only meet current needs but also anticipate future trends.

Available For: Authoring, Consulting, Influencing, Speaking

Travels From: Seattle

Speaking Topics: Cybersecurity, Cloud Transformation, Application Modernization, Digital Transformation, Technology Leadership, 5G, Microsoft Azure

| Gaurav Agarwaal | Points |
|---|---|
| Academic | 0 |
| Author | 85 |
| Influencer | 249 |
| Speaker | 0 |
| Entrepreneur | 30 |
| Total | 364 |

Points based upon Thinkers360 patent-pending algorithm.

AI Governance In Tax Technology: The New Mandate For Trust And Transparency

Tags: Cloud, Digital Transformation, Leadership

From Back Office To Brain Trust: Why GCCs Need To Become AI Innovation Engines

Tags: Cloud, Digital Transformation, Leadership

Future-Proofing Data Strategy: Best Practices for Modernization to Lakehouse Architecture

Tags: Cloud, Cybersecurity, Digital Transformation

Global Capability Centers (GCCs) at an Inflection Point — AI-First or Obsolete?

Tags: Cloud, Cybersecurity, Digital Transformation

Working at the Speed of Trust: The Leadership Imperative

Tags: Agile, Leadership, Management

Microsoft Fabric: The Future of Unified Data Intelligence

Tags: Cloud, Digital Transformation, Leadership

AI Agents leading Agentic Economy: The Rise of Autonomous Innovation

Tags: Cloud, Cybersecurity, Digital Transformation

Adaptive Identity : Redefining Trust in a Hyper-Connected World

Tags: Cloud, Cybersecurity, Digital Transformation

The Strategic Imperative for Data-AI Convergence

Tags: Cloud, Cybersecurity, Digital Transformation

Data Observability: Building Resilient and Transparent Data Ecosystems

Tags: Cloud, Cybersecurity, Digital Transformation

From Technocrat to Business Leader: The CISO’s Strategic Transformation

Tags: Cloud, Cybersecurity, Digital Transformation

Redefining Data Modernization: The Path to a Cloud-First, Data-Driven Enterprise

Tags: Cloud, Cybersecurity, Digital Transformation

Harnessing GenAI: Building Cyber Resilience Against Offensive AI

Tags: Cloud, Cybersecurity, Digital Transformation

Seven Years, Five Continents, Global Impact, One Journey: Unveiling 24 Pearls of My Executive…

Tags: Cloud, Cybersecurity, Digital Transformation

Seven Years, Five Continents, Global Impact, One Journey: Unveiling 24 Pearls of My Executive Odyssey

Tags: Diversity and Inclusion

Generative AI in the Crosshairs: CISOs' Battle for Cybersecurity

Tags: Generative AI

Generative AI in the Crosshairs: CISOs’ Battle for Cybersecurity

Tags: Cloud, Cybersecurity, Digital Transformation

Tags: AI, Generative AI

Securing Tomorrow: Unleashing the Power of Breach and Attack Simulation (BAS) Technology

Tags: Cloud, Cybersecurity, Digital Transformation

Decoding new SEC Regulations: A CISO’s Guide to DOs and DON’Ts in Cybersecurity

Tags: Cloud, Cybersecurity, Digital Transformation

Securing Tomorrow: Unleashing the Power of Breach and Attack Simulation (BAS) Technology

Tags: Cybersecurity, Security, IoT

Decoding new SEC Regulations: A CISO's Guide to DOs and DON'Ts in Cybersecurity

Tags: Cybersecurity

12 Secrets for Successful Digital Transformation

Tags: Digital Transformation, Leadership

Letter from My Desk #15: 4 Managerial Archetypes that employees encounter in their career

Tags: Digital Transformation, Leadership

Global Capability Centers (GCCs) at an Inflection Point – AI-First or Obsolete?

Tags: Cloud, Digital Transformation, Leadership

The Next Evolution in Data Analytics: Navigating Trust, Security, and AI Governance

Tags: Cloud, Digital Transformation, Leadership

Thriving in the Era of Data Dominance: 2025 Trends Revealed

Tags: Cloud, Digital Transformation, Leadership

AI Agents leading Agentic Economy: The Rise of Autonomous Innovation

Tags: Cloud, Digital Transformation, Leadership

Adaptive Identity : Redefining Trust in a Hyper-Connected World

Tags: Cloud, Digital Transformation, Leadership

The Strategic Imperative for Data-AI Convergence

Tags: Cloud, Digital Transformation, Leadership

Data Observability: Building Resilient and Transparent Data Ecosystems

Tags: Cloud, Digital Transformation, Leadership

From Technocrat to Business Leader: The CISO’s Strategic Transformation

Tags: Cloud, Digital Transformation, Leadership

Guardian of the Future : The Strategic Imperative for Securing AI Systems

Tags: Cloud, Digital Transformation, Leadership

Redefining Data Modernization: The Path to a Cloud-First, Data-Driven Enterprise

Tags: Cloud, Digital Transformation, Leadership

Decoding new SEC Regulations: A CISO's Guide to DOs and DON'Ts in Cybersecurity

Tags: Cloud, Digital Transformation, Leadership

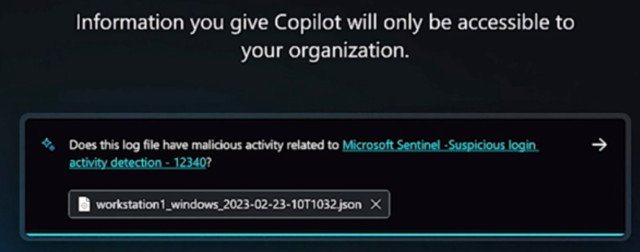

Introduction to Microsoft Security Copilot

Tags: Cloud, Digital Transformation, Leadership

Forbes Technology Council

Tags: Cloud, Digital Transformation, Leadership

Securing Tomorrow: Unleashing the Power of Breach and Attack Simulation (BAS) Technology

As the cybersecurity landscape continues to evolve, the challenges associated with defending

against cyber threats have grown exponentially. Threat vectors have expanded, and cyber

attackers now employ increasingly sophisticated tools and methods. Moreover, the

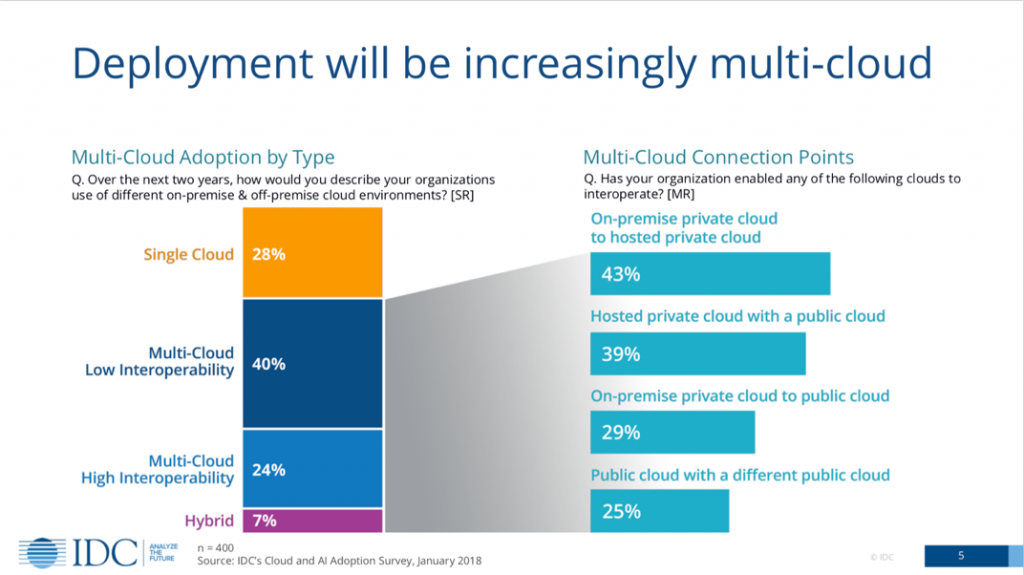

complexity of managing security in today's distributed hybrid/multi-cloud architecture,

heavily reliant on high-speed connectivity for both people and IoT devices, further

compounds the challenges of #cyberdefense.

One of the foremost concerns for corporate executives and boards of directors is

the demonstrable effectiveness of cybersecurity investments. However, quantifying and

justifying the appropriate level of spending remains a formidable obstacle for most enterprise

security teams. Securing additional budget allocations to bolster an already robust security

posture becomes particularly challenging in the face of a rising number of #cyberbreaches,

which have inflicted substantial reputational and financial harm on companies across diverse

industries.

The modern enterprise's IT infrastructure is an intricate web of dynamic networks,

cloud resources, an array of software applications, and a multitude of endpoint

devices. These enterprise IT ecosystems are vast and intricate, featuring a myriad of network

solutions, a diverse array of endpoint devices, and a mix of Windows and Linux servers.

Additionally, you'll find desktops and laptops running various versions of both Windows and

macOS dispersed throughout this intricate landscape. Each component within this

architecture boasts its own set of #securitycontrols, making the enterprise susceptible to

#cyberthreats due to even the slightest misconfiguration or a shift towards less secure

settings.

In this environment, a simple misconfiguration, or even a minor deviation towards less

secure configurations, can provide attackers with the foothold they need to breach an

organization's infrastructure, networks, devices, and software. It underscores the critical

importance of maintaining a vigilant and proactive approach to cybersecurity in this everevolving digital era.

As organizations look for ways to demonstrate the effectiveness of their security spend and

the policies and procedures put in place to remediate and respond to security

threats, vulnerability testing can be an important component of a security team’s

vulnerability management activities. There are several testing approaches that

organizations use as part of their vulnerability management practices. Four of the most

common are listed below:

• Penetration testing: A common testing approach that enterprises employ to uncover vulnerabilities in their infrastructure. A pen test involves highly skilled security experts using tools and attack methods employed by actual attackers to achieve a specific pre-defined breach objective. The pen test covers networks, applications, and endpoint devices.

• Red Teaming: A red team performs “ethical hacking” by imitating advanced threat actors to test an organization's cyber defenses. They employ stealthy techniques to identify security gaps, offering valuable insights to enhance defenses. The results from a red-teaming exercise help identify needed improvements in security controls.

• Blue Teaming: A blue team is an internal security team that actively defends against real attackers and responds to red team activities. Blue teams should be distinguished from standard security teams because of their mission to provide constant and continuous cyber defense against all forms of cyber-attacks.

• Purple Teaming: The objective of purple teams is to align red and blue team efforts. By leveraging insights from both sides, they provide a comprehensive understanding of cyber threats, prioritize vulnerabilities, and offer a realistic APT (Advanced Persistent Threat) experience to improve overall security.

Although these vulnerability testing approaches are commonly used by organizations, there are several challenges associated with them:

• These approaches are highly manual and resource intensive, which for many organizations translates to high cost and a lack of skilled in-house resources to perform these tests.

• Although the outcome of these vulnerability tests provides vital information for the organization to act on, the tests are performed infrequently, largely because of the cost and lack of skilled resources mentioned previously.

• These methods provide a point-in-time view of an organization’s security posture, which is becoming less effective for companies moving to a more dynamic cloud-based IT architecture with an increasing diversity of endpoints and applications.

Traditional vulnerability testing approaches yield very little value because the security landscape and enterprise IT architectures are dynamic and constantly changing.

Since testing the cybersecurity posture of organizations is becoming a top priority, it triggered an increased demand for the latest and most comprehensive testing solutions. Moreover, it’s almost impossible, from a practical standpoint, for multiple enterprise security teams to manually coordinate their work and optimize configurations for all the overlapping systems. Different teams have their own management tasks, mandates, and security concerns. Additionally, performing constant optimizations and manual testing imposes a heavy burden on already short-staffed security teams. This is why security teams are turning to Breach and Attack Simulation (BAS) to mitigate constantly emerging (and mostly self-inflicted) security weaknesses.

Definition - Breach and Attack Simulation (BAS)

Gartner defines Breach and Attack Simulation (BAS) technologies as tools “that allow enterprises to continually and consistently simulate the full attack cycle (including insider threats, lateral movement and data exfiltration) against enterprise infrastructure, using software agents, virtual machines and other means.”

BAS tools replicate real-world cyber attacker tactics, techniques, and procedures (TTPs). They assist organizations in proactively identifying vulnerabilities, evaluating security controls, and improving incident response readiness. By simulating these attacks in a controlled environment, organizations gain valuable insights into security weaknesses, enabling proactive measures to strengthen overall #cybersecurity.

BAS automates the testing of threat vectors, including external and insider threats, lateral movement, and data exfiltration. While it complements red teaming and penetration testing, BAS cannot entirely replace them. It validates an organization's security posture by testing its ability to detect a range of simulated attacks using SaaS platforms, software agents, and virtual machines.

Most BAS solutions operate seamlessly on LAN networks without disrupting critical business operations. They produce detailed reports highlighting security gaps and prioritize remediation efforts based on risk levels. Typical users of BAS technologies include financial institutions, insurance companies, and various other industries.

BAS Primary Functions

Although typical BAS offerings encompass much of what traditional vulnerability testing includes, they differ in a very critical way. At a high level, BAS primary functions are as follows:

• Attack (mimic / simulate real threats)
• Visualize (clear picture of threat and exposures)
• Prioritize (assign a severity or criticality rating to exploitable vulnerabilities)
• Remediate (mitigate / address gaps)
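The Visualize and Prioritize functions above can be illustrated with a minimal Python sketch. It is not drawn from any specific BAS product: the `Finding` record, the `SEVERITY_RANK` scale, and the sample results are all hypothetical names and data, assumed here only to show how undetected simulated attacks might be ranked for remediation.

```python
from dataclasses import dataclass

# Hypothetical severity scale; real BAS products define their own ratings.
SEVERITY_RANK = {"critical": 0, "high": 1, "medium": 2, "low": 3}


@dataclass(frozen=True)
class Finding:
    technique: str   # simulated attacker technique (illustrative label)
    asset: str       # system the simulation targeted
    detected: bool   # did existing controls detect or block the simulation?
    severity: str    # key into SEVERITY_RANK


def prioritize(findings):
    """Return only the undetected findings, most severe first."""
    gaps = [f for f in findings if not f.detected]
    return sorted(gaps, key=lambda f: (SEVERITY_RANK[f.severity], f.asset))


def summarize(findings):
    """Reduce the 'visualize' step to a count of open gaps per severity."""
    counts = {}
    for f in prioritize(findings):
        counts[f.severity] = counts.get(f.severity, 0) + 1
    return counts


# Made-up simulation results, for illustration only.
SAMPLE_RESULTS = [
    Finding("lateral movement", "file-server", detected=False, severity="high"),
    Finding("data exfiltration", "hr-database", detected=False, severity="critical"),
    Finding("phishing payload", "laptop-17", detected=True, severity="medium"),
    Finding("privilege escalation", "build-agent", detected=False, severity="medium"),
]

if __name__ == "__main__":
    for finding in prioritize(SAMPLE_RESULTS):
        print(f"{finding.severity:>8}  {finding.asset}: {finding.technique}")
```

Sorting on a numeric rank rather than the severity label keeps the ordering explicit, and filtering on `detected` first means the remediation queue contains only gaps that existing controls missed.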

Tags: Cybersecurity

Digital Resilience Requires Changes In The Taxonomy Of Business IT Systems

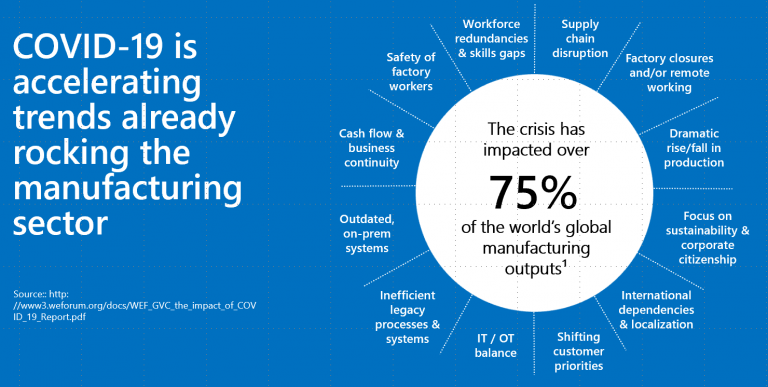

We are living in a hyper-digitally dynamic ecosystem. As we are moving towards a digitally dependent future, the need for Digital Resilience is increasing rapidly.

Digital Resilience gives businesses several ways to use digital tools and systems to recover from crises quickly. Today, digital resilience and supply chain resilience no longer imply merely the ability to manage risk. Managing risk now means being better positioned than competitors to deal with disruptions and even gain an advantage from them.

The need for Digital Resilience and sustainable supply chains has undoubtedly brought about a change in business IT taxonomy, enhancing business processes and performance.

In this article, I intend to highlight these very transformational changes in IT systems that have happened over two decades and new IT systems that enterprises need to develop and transform to become more successful, productive, and efficient.

Systems of Records (SoR) are software solutions that serve as the backbone for business processes.

The power of SoR is that they are the ultimate source and therefore “record” of critical business data. Essentially, SoR can be understood as a data storage and retrieval system for a company that works as an authoritative data source for the entire organization.

Systems of Records (SoR) are valuable to a company because they become a single source of truth that provides essential insights and information to the company’s management teams.

Initially, SoRs were similar to enterprise resource planning (ERP) systems, back when on-premises ERPs (such as those from Oracle and SAP) were in full use. However, over time, companies started to realize the time and cost inefficiencies of incorporating ERP. It required dedicated IT teams to set up and had a sub-par user interface.

During the last decade, SoR took a turn by incorporating SaaS-powered software lock-in tools such as Workday, SuccessFactors, Salesforce, and Dynamics 365, which have proved to be a little more efficient than their predecessors. SoR is critical for companies to enforce data integrity.

Systems of Engagement (SoE) were introduced to help employees, customers, and partner ecosystems engage better with the Systems of Records (SoR), data, and process flow. SoE are systems that essentially collect data and enable customers, employees, and partners to interact with the business and associated methodology. They are task-based systems or tools used to retrieve specific information and data. Enterprises are incorporating SoE, not for their software, but for introducing new, data-driven processes to talent acquisition and talent management and business processes for operational excellence.

Organizations see changes in their internal management systems and are now moving away from top-down management to creating a more agile self-management system. Enterprises usually integrated Systems of Records (SoR) and Systems of Engagement (SoE) for better efficiency. Stand-alone traditional ERPs were becoming more and more expensive to handle and couldn’t keep up with the fast-paced digital transformation and innovation. Hence, by integrating SoE (a two-tier approach), organizations could make their business operations much more agile and cheaper.

The idea is simple:

Systems of Engagement are placed to “engage customers or engage with customers” and are supposed to be designed for flexibility and scalability. In contrast, Systems of Records (ERP, HRMS, ITSM, etc.) are just data repositories.

As innovation accelerated and businesses started automating and utilizing analytical tools in their operations, the need for different systems grew. As data-driven companies began to grow, the necessity for high-quality data and data modeling tools grew to gain actionable insights to make better business decisions. Hence, IT systems that support decision-making started to include Systems of Innovation and Intelligence (SoII) into their operations.

Systems of Innovation and Intelligence (SoII) gather data from Systems of Record (SoR) and Systems of Engagement (SoE) and derive insights. They analyze the accumulated data and suggest improvements to enhance business performance and decisions. Earlier in businesses, SoR and SoE were examined and observed separately. However, now, SoII converge these data to derive better insights that can be used to create better business outcomes or to innovate new products.

According to Forrester’s research, businesses are “drowning in data” and failing to gather insights. Big data, agile business intelligence, data analytics, etc., surely do give enterprises insight. However, they solve the problem only partially. The solution lies in companies employing a structured system that harnesses the actual value from gathered data. The answer lies in embedding “closed-loop systems”, which are nothing but the Systems of Innovation and Intelligence (SoII).

With SoII, enterprises can perform different types of analysis on collected data through predictive analytics, descriptive analytics, cognitive analytics, etc. Although enterprises have incorporated BI and analytics to gather insight, it won’t be enough without proper systems that convert these insights into actions. It is crucial to test what works, what doesn’t and understand the required changes. With data, you need to act intelligently.

To be successful in the new normal world, it is crucial to adapt to the fast-paced digital transformation and take a holistic approach to enhance business agility and performance. Here’s what needs to change going forward, in my opinion:

Systems of Records (SoR) should modernize with a cloud-first approach to transform them into Systems of Records and Intelligence.

Modernizing an existing SoR to Systems of Records and Intelligence will require a view of the workloads from an underlying infrastructure perspective and core application architecture characteristics to determine its suitability to operate on the cloud. But a cloud-first approach will leverage a rich ecosystem of services from the cloud marketplace, enabling rapid development of SoE applications.

Systems of Engagement should now transition and offer different experiences for employees and customers, at least from a perspective of:

It is essential to know whether employees and customers can seamlessly adapt to these or not. For example, employees of different generations (Gen Z to Gen X) engage through varying form factors and experience mediums. Enterprises must offer different experiences to employees, and this can happen by refining Systems of Engagement into a new System of Engagement and Experience.

Systems of Innovation (SoI) should now define the enterprises’ future. Enterprises need to focus more on establishing a System of Innovation (SoI) with a cloud-first mindset. SoI should enable fast-paced innovation for developing new Sustainable and Resilient Products and Services.

Enterprises need to ensure they leverage Data as Assets and implement Systems of Insights to support Data-Driven Decision making across the entire Supply Chain and the broad spectrum of business processes and functions. Today enterprises are under immense pressure from Regulatory Authorities, Cyberattacks, and the pivot in customer buying patterns to prefer Trusted, Responsible and Sustainable Products. This effectively means enterprises need to look at Security and Compliance by design – which is best implemented by transitioning to Systems of Insights and Compliance.

Skills and Talent are the new currency for the business. It is critical to capture the knowledge and experiences across the company both from a perspective of improving productivity and faster time to market.

Many enterprises implemented Learning Management Systems (LMS) in some shape or form. Still, they were seen as a secondary system for Talent Retention and tracking employee training. But to succeed in this new fast-paced innovation, businesses should now plan to implement Systems of Knowledge and Learning (SoKL).

SoKL reduces the costs of inefficiency by making company knowledge more available, accessible, and accurate.

Some of the benefits of Systems of knowledge and learning are:

Digital transformation has revolutionized businesses and IT Systems that support decision-making. Building digital Resilience into every aspect of IT infrastructure, systems, and software will enable organizations to rapidly meet changing market and customer needs and create sustainable competitive advantages in this new reality.

To be successful in the hyper-digitally dynamic ecosystem, enterprises need to relook at their Business IT systems and transform the outdated Systems of Records, System of Engagements taxonomy to differentiated Systems of Records and Intelligence, Systems of Engagement and Experience (SoEE), Systems of Innovation (SoI), Systems of Insights and Compliance (SoIC) and System of Knowledge and Learning (SoKL).

Tags: Cloud, Digital Transformation, Digital Twins

How AI Democratization Helped Against COVID-19

AI not only helped in data gathering but also in data processing, data analyses, number crunching, genome sequencing, and making the all-important automated protein molecule binding prediction. AI’s use will not end with the vaccine’s discovery and distribution; it will be used to study the side effects in the billions of vaccinations.

Many countries have rolled out coronavirus vaccines and many are conducting dry runs to check the preparedness for vaccination drives. The World Health Organisation has extended emergency use approval to the Pfizer/BioNTech vaccine. This has paved the way for developing countries, which do not have the infrastructure to run vaccine trials. They can now begin immunizing their populations against Covid-19.

The world was quick to realize the importance of coming together to share genome sequencing data and other technical know-how, which accelerated the pace of vaccine development. However, this would have been impossible without the presence of cloud computing and Artificial Intelligence (AI).

AI helped not only in data gathering but also in data processing, data analyses, number crunching, genome sequencing, and making the all-important automated protein molecule binding prediction.

Coronavirus, as we know, is a cousin of Severe Acute Respiratory Syndrome (SARS), which caused many deaths over a decade ago. Researchers predicted that the pathogen may have been transmitted through animals then. These kinds of predictions could only be done with the help of AI.

Sanjay Sehgal, Chairman and CEO, MSys Group, said, “In case of coronavirus, the first prediction was done by a Canadian firm BlueDot, which specializes in infectious disease investigation through AI. The firm used its AI-powered system to go through animal implant disease networks. It also used AI to collect information and predict the outfall of the virus and warned its clients to retrain their travel activities much before governments declared it officially.”

CNBC reported that BlueDot had spotted COVID-19 nine days before the World Health Organisation released its statement alerting people to the emergence of a novel coronavirus. AI and cloud computing have, in fact, been helping the pharma sector for some time now.

Gaurav Aggarwal, VP, Global Cloud Solutions Strategy and GTM Lead, Avanade, stated that the democratization of AI and Machine Learning (ML) in the public cloud has revolutionized science and engineering. The pharma industry is slowly maturing to leverage the same. “The advent of AI as an adaptive and predictive technology coupled with democratization of AI/ML, Augmented Reality/Virtual Reality (AR/VR) technology by public cloud providers such as Microsoft, Google, AWS offers the possibility for radical optimization of core research, business processes, reshaping market opportunities for pharmaceutical companies and challenging the status quo on access to affordable medicine worldwide,” he added.

The process of drug discovery requires running complex mathematical models of behavior using high-performance computing (HPC). The modern data analysis tools, such as cloud and AI, accelerated the process of identifying the molecular stimulators for further evaluation.

These tools helped in the search of antibodies that would prevent and fight Coronavirus. Additionally, research databases such as COVID Open Research Dataset (CORD-19) powered by AI helped researchers in their studies.

These technologies aren’t 100% accurate though. In 2008, Google launched an AI-powered service to track the flu outbreak using SEO and tracing people’s search queries. The data collated comprised people’s supermarket purchases, browsing patterns, and the theme and rate of private messages.

“Though Google’s AI service predicted the flu outbreak much before government and its agencies, its reports had to be pulled down after being found that the service had been consistently over-estimating the pervasiveness of the disease,” Sehgal pointed out.

Based on case studies such as these, it should be noted that AI-run algorithms can help simplify the huge amount of data from several experiments and help discover patterns that a human brain might fail to spot, but in the end, AI still cannot predict the success of a vaccine in humans. “We will have to wait and watch how the vaccine and its effects unfold,” Sehgal said.

While we can never expect overnight success when dealing with something as complex as vaccine development, we can act now by using AI, ML, and public cloud to optimize the overall process and remove some of the constraints and bottlenecks. “Amplifying progress in creating new medications for diseases is among the most profound near-term objectives of AI and Covid-19 vaccines availability in less than 12 months, is an example of how AI can help in crisis response,” said Aggarwal.

The use of AI is not going to end with the discovery and distribution of the vaccine. It is also going to be used to study the side effects of the billions of vaccinations. Fortune recently reported that the UK arm of Genpact has been asked to design a machine learning system that can ingest reports of side effects and pick up on potential safety concerns.

AI to study side effects of drugs has been the focus of academic researchers for several years. Many governments, apart from the UK, are also using AI to study coronavirus vaccine side effects. The quick rollout of the vaccine has proved to be a huge success for AI and ML as it has paved the way for greater use of these tools in the health and pharma sector.

Tags: Cloud, COVID19, Digital Twins

Systematic and Chaotic Testing: A Way to Achieve Cloud Resilience

In today’s digital technology era, where downtime translates to shutdown, it is imperative to build resilient cloud structures. For example, in the pandemic, IT maintenance teams can no longer be on-premises to reboot a server in the data center. If the on-premises hardware goes down, this can block access to data and software, halt productivity, and cause overall business loss. The solution here is to move your IT operations to cloud infrastructure that is backed by round-the-clock technical support from remote teams. Cloud essentially acts as a savior here.

Recently, companies have been making full use of the cloud’s potential, and hence, observability and resilience of cloud operations become imperative as downtime now equates to disconnection and business loss.

Imagining a cloud failure in today’s technology-driven business economy would be disastrous. Any faults and disruption will lead to a domino effect, hampering the company’s system performances. Hence, it becomes essential for organizations and companies to build resilience into their cloud structures through chaotic and systematic testing. In this blog, I will take you through what resilience and observability mean, and why resilience and chaos testing are vital to avoid downtimes.

To avoid cloud failure, enterprises must build resilience into their cloud architecture by testing it in continuous and chaotic ways.

Observability can be understood through two lenses. One is through control theory, which explains observability as the process of understanding the state of a system through the inference of its external outputs. Another lens explains the discipline and the approach of observability as being built to gauge uncertainties and unknowns.

It helps to understand the properties of a system or an application. Observability for cloud computing is a prerequisite that leverages end-to-end monitoring across various domains, scales, and services. Observability shouldn’t be confused with monitoring. Monitoring tells you when something goes wrong, whereas observability helps you understand why it went wrong, that is, the root cause of problems and anomalies in applications. They each serve a different purpose but certainly complement one another.

Observability along with resilience are needed for cloud systems to ensure less downtime, faster velocity of applications, and more.

| Property | Question it answers |
| --- | --- |
| Stability | Is it on/reachable? |
| Reliability | Will it work the way it should consistently and when we need it to? |
| Availability | Is it reliably accessible from anywhere, any time? |
| Resilience | How does the system respond to challenges so that it’s available reliably? |

Every enterprise migrating to cloud infrastructure should ensure and test its systems for stability, reliability, availability, and resilience, with resilience being at the top of the hierarchy. Stability is to ensure that the systems and servers do not crash often; availability ensures system uptime by distributing applications across different locations to ease the workload; reliability ensures that cloud systems are efficiently functioning and available. But, if the enterprise wants to tackle unforeseen problems, then constantly testing resilience becomes indispensable.

Resilience is the expectation that something will go wrong and that the system is tested in a way to address and maneuver itself to tackle that problem. The resilience of a system isn’t automatically achieved. A resilient system acknowledges complex systems and problems and works to progressively take steps to counter errors. It requires constant testing to reduce the impact of a problem or a failure. Continuous testing avoids cloud failure, assuring higher performance and efficiency.

Resilience can be achieved through site-resilient design and systematic testing approaches such as chaos testing.

Conventional testing ensures a seamless setup and migration of applications into cloud systems and additionally monitors that they perform and work efficiently. This is adequate to ensure that the cloud system does not change application performance and functions in accordance with design considerations.

Conventional testing doesn’t suffice as it is inefficient in uncovering underlying hidden architectural issues and anomalies. Some of the faults appear dormant as they only become visible when specific conditions are triggered.

“We see a faster rate of evolution in the digital space. Cloud lets us scale up at the pace of Moore’s Law, but also scale out rapidly and use less infrastructure” says Scott Guthrie on the future and high promises of cloud. As a result of the pandemic and everyone being forced to work from home, there has been a surge in cloud investments. But, due to this unprecedented demand, all hyperscalers had to bring in throttling and prioritization controls, which goes against the on-demand elasticity principle of the public cloud.

The public cloud isn’t invincible when it comes to outages and downtime. For example, the recent Google outage that halted multiple Google services like Gmail and YouTube showcases how the public cloud isn’t necessarily free of system downtimes either. Hence, I would say the pandemic has added a couple of additional perspectives to resilient cloud systems:

The pandemic has highlighted the value of continuous and chaotic testing of even resilient cloud systems. A resilient and thoroughly tested system will be able to manage that extra congested traffic in a secure, seamless, and stable way. In order to detect the unknowns, chaos testing and chaos engineering are needed.

In the public cloud world, architecting for application resiliency is more critical due to the gaps in base capabilities provided by cloud providers, the multi-tier/multiple technology infrastructure, and the distributed nature of cloud systems. This can cause cloud applications to fail in unpredictable ways even though the underlying infrastructure availability and resiliency are provided by the cloud provider.

To establish a good base for application resiliency, during design the cloud engineers should adopt the following strategies to test, evaluate and characterize application layer resilience:

By adopting an architecture-driven testing approach, organizations can gain insights into the base level of cloud application resiliency well before going live and they can allot sufficient time for performance remediation activities. But you still would need to test the application for unknown failure and aspects of multiple failure points in cloud-native application design.

Chaos testing is an approach that intentionally induces stress and anomalies into the cloud structure to systematically test the resilience of the system.

Firstly, let me make it clear that chaos testing is not a replacement for actual testing systems. It’s just another way to gauge errors. By introducing degradations to the system, IT teams can see what happens and how it reacts. But, most importantly it helps them to gauge the gaps in the observability and resilience of the system — the things that went under the radar initially.

This robust testing approach was pioneered by Netflix during its migration to cloud systems back in 2011, and the method has since become well established. Chaos testing brings inefficiencies to light and pushes the development team to change, measure, and improve resilience, and it helps cloud architects to better understand and change their design.

Constant, systematic, and chaotic testing increases the resilience of cloud infrastructure and ultimately boosts the confidence of managerial and operational teams in the systems that they’re building.

A resilient enterprise must create resilient IT systems partly or entirely on cloud infrastructure.

Using chaos and site reliability engineering helps enterprises to be resilient across:

To establish complete application resiliency, in addition to the cloud application design aspects mentioned earlier, the solution architect needs to adopt architecture patterns that allow specific faults to be injected, triggering internal errors that simulate failures during the development and testing phases.

Some of the common examples of fault triggers are delay in response, resource-hogging, network outages, transient conditions, extreme actions by users, and many more.
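
To make the idea of fault triggers concrete, here is a minimal Python sketch of two of them, a delay in response and a transient failure, injected around an ordinary function. The names `inject_faults`, `InjectedFault`, and `call_with_retry` are hypothetical helpers invented for this illustration; they do not come from Chaos Monkey, Gremlin, or any other tool.

```python
import random
import time
from functools import wraps


class InjectedFault(Exception):
    """Stands in for a real dependency failure during a chaos test."""


def inject_faults(failure_rate=0.0, max_delay_s=0.0, rng=None):
    """Wrap a function so that calls may be delayed or fail at random.

    failure_rate: probability (0.0 to 1.0) that a call raises InjectedFault.
    max_delay_s:  upper bound of a random delay added before each call.
    rng:          optional random.Random instance, for reproducible runs.
    """
    rng = rng or random.Random()

    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            if max_delay_s > 0:
                time.sleep(rng.uniform(0, max_delay_s))  # delayed response
            if rng.random() < failure_rate:
                raise InjectedFault(f"injected failure in {func.__name__}")
            return func(*args, **kwargs)

        return wrapper

    return decorator


def call_with_retry(func, attempts=3):
    """Call func up to `attempts` times, re-raising the last injected fault."""
    last_error = None
    for _ in range(attempts):
        try:
            return func()
        except InjectedFault as err:
            last_error = err
    raise last_error


@inject_faults(failure_rate=0.0)
def healthy_dependency():
    return "ok"


@inject_faults(failure_rate=1.0)
def broken_dependency():
    return "ok"
```

Wrapping a dependency this way in a test environment shows whether the calling code (here, a simple retry loop) copes with the fault or lets it cascade. In a real system the fault would more likely be injected at the network or infrastructure layer.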

Chaos testing can be done by introducing an anomaly into any of the seven layers of the cloud structure, which helps you assess the impact on resilience.

When Netflix announced its resiliency tool, Chaos Monkey, in 2011, many development teams adopted it for chaos engineering. Gremlin, another tool developed by software engineers, essentially does the same thing. But, if you’re looking to perform a chaos test in the current context of COVID-19, you can do so by using GameDay. This simulates an anomaly wherein there’s a sudden increase in traffic; for example, customers accessing a mobile application at the same time. The goal of GameDay is not just to test the resilience but also to enhance the reliability of the system.

The steps you need to take to ensure successful chaos testing are the following:
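
The traffic-spike scenario described above can be rehearsed on paper before it is run against a real system. The following Python sketch is a toy model under stated assumptions: the service handles a fixed number of requests per tick, waits in a bounded queue, and drops anything that overflows. The function name `simulate_traffic` and all the numbers are made up for illustration.

```python
def simulate_traffic(requests_per_tick, capacity_per_tick, queue_limit):
    """Simulate a fixed-capacity service with a bounded request queue.

    requests_per_tick: iterable with the number of requests arriving per tick.
    capacity_per_tick: requests the service can handle in one tick.
    queue_limit:       maximum requests allowed to wait; the rest are dropped.

    Returns (served, dropped, peak_queue).
    """
    queue = 0
    served = dropped = peak_queue = 0
    for arriving in requests_per_tick:
        queue += arriving
        if queue > queue_limit:
            dropped += queue - queue_limit  # overflow is rejected
            queue = queue_limit
        peak_queue = max(peak_queue, queue)
        handled = min(queue, capacity_per_tick)
        served += handled
        queue -= handled
    return served, dropped, peak_queue


# Steady traffic stays within capacity: nothing is dropped.
steady = simulate_traffic([50] * 10, capacity_per_tick=100, queue_limit=200)

# A two-tick spike overwhelms the queue and requests are dropped.
spike = simulate_traffic([50, 50, 500, 500, 50, 50],
                         capacity_per_tick=100, queue_limit=200)

print(steady)  # (500, 0, 50)
print(spike)   # (500, 700, 200)
```

Comparing the two runs gives a steady-state baseline and a measure of how badly the spike degrades service, which is the kind of gap such an exercise is meant to expose.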

Other specific ways to induce a faulty attack and sequence on the system could be:

In today’s digital age, where cloud transition and cloud usage are surging, it becomes imperative to enhance cloud resilience for the effective performance of your applications. Continuous and systematic testing is imperative not only in the life cycle of a project, but also to ensure cloud resiliency at a time when even the public cloud is over-burdened. By preventing lengthy outages and future disruptions, businesses save significant costs, protect goodwill, and assure service durability for customers. Chaos engineering, therefore, becomes a must for large-scale distributed systems.

Tags: Climate Change, Cloud, Digital Twins

Changing The AI Landscape Through Professionalization

Artificial Intelligence has been the talk of the town for a while. But, why does AI matter? How can an organization successfully scale AI? And, what role does professionalization play in the process of successful AI deployment? Read this blog to know more about all things related to the data-driven AI landscape.

In the past three years, we have seen companies spend more than $300B on AI applications, and this has turned a spotlight on the AI landscape, making it a high-stakes business priority.

According to the Forrester report, organizations that scale AI are 7x more likely to be the fastest-growing businesses in their industry. In a study by Accenture, it was found that 75% of global executives believe that if they don’t scale AI, they risk going out of business in just 5 years.

While scaling AI is crucial, most companies are still at the stage of running pilots and experiments, and are struggling to achieve the value they expected.

In a study by Accenture, 84% of C-suite executives recognize the need to leverage AI to achieve their business goals. AI applications, with the help of machine learning and deep learning, can utilize the data in real-time and adapt to new changes to ensure that the business benefit is compounded. In this way, AI enables businesses to ensure agility with a regular stream of insights to drive innovation and competitive advantage.

As the innovation in the AI landscape progresses, we are inching towards an era where algorithms tell us all about our tastes and preferences. Looking at this, we can say that AI in a leadership position doesn’t seem like a wild fantasy anymore.

The Covid-19 pandemic has left organizations vulnerable and has exposed their daily operations. This has heightened the need for real-time insights and has opened our eyes to the gaps in the capabilities to access, mobilize and utilize data. Additionally, the air around AI is still not clear and this is causing challenges for business leaders who are geared up to scale with AI but are yet to introduce their teams to the “scale or fail” approach.

To scale business processes, organizations must cultivate confidence in AI and design the right governance structure to allow an ethical collaboration between humans and machines. Additionally, it is important to define business and technical challenges that AI can help solve, and the efficiencies for stakeholders across organizations that AI can help achieve. Based on these, C-suite executives should prioritize the following technology and human capital investments to achieve their long-term goals:

Here, we talk about one of the critical investments for an organization – Human Capital. It is necessary to create a company of believers and for that, an organization needs to leverage its goal of data-driven reinvention.

Organizations should work with data architects, business owners, and solution architects to develop their AI strategy, underpinned by data strategy, data taxonomy, and an analysis of the value that their company can and wishes to create. “Establishing a Data-Driven culture is the key, and often the biggest challenge, to scaling artificial intelligence across your organization.”

While your technology enables business, your workforce is the essential driving force. It is crucial to democratize data and AI literacy by encouraging skilling, upskilling, and reskilling. People in the organization would need to change their mindset from experience-based, leadership-driven decision making to data-driven decision making, where employees augment their intuition and judgment with AI algorithms’ recommendations to arrive at better answers than either humans or machines could reach on their own.

My recommendation would be to carve out “System of Knowledge and Learning” as a separate stream in overall Enterprise Architecture, along with System of Records, Systems of Engagement and Experiences, Systems of Innovation and Insight.

AI and data literacy will help in increasing employee satisfaction because the organization is allowing its workforce to identify new areas for professional development. This culture aims to educate employees to adopt an “out of the box” approach to facing rapid and unprecedented changes.

Clients, today, need organizations that value simplification of their system and vendor ecosystem. Enterprises should prioritize choosing the right AI/ML Technology provider partner, like Microsoft, with a capable partner and ISV ecosystem. To simplify these ecosystems, an organization needs to identify the functional gaps that exist, evaluate the applications that align the business strategy, and streamline the infrastructure for ongoing operations.

Who doesn’t hate a typical case of Chinese whispers? Organizations need to define a common taxonomy of business terms, including the KPI, ORA, leading indicators, and domain model. This should be implemented to avoid the need for an interpreter between two different users so that everyone in the business (including the extended partner ecosystem in the supply chain) speaks the same language and makes the right decision without any confusion. This unified taxonomy should be applied consistently across “System of Knowledge and Learning”, System of Records, Systems of Engagement and Experiences, and Systems of Innovation and Insight.

More data is not always better. In a world where data is proliferating and data begets more data, it can be tempting to gather more and more. Having a strong data strategy ensures you’re curating the right data to deliver the desired outcome and then capturing its insights to fuel an AI strategy that delivers that outcome at speed and scale.

In a study by Accenture, three out of four C-suite leaders believed that if they fail to scale AI in the coming years, they will risk their business. As professionalization is the precursor to successful AI scaling, this has encouraged organizations to employ professionalization techniques like establishing multidisciplinary teams and clear lines of accountability.

To fuel the need for AI scaling, the pandemic has sharpened the contrast between those who have professionalized their AI capabilities and those who have not. Businesses are competing against each other to embrace new data capabilities to return to sustainable growth, which is possible through successful professionalization.

a.) When organizations adopt a professionalized approach of deploying trained, interdisciplinary teams to work on these applications, they can maximize the value of their AI investment.

b.) Professionalization helps organizations to achieve consistency in results when performing the same or similar actions in the future. Trained data practitioners build cutting-edge technologies across use cases by leveraging repeatability.

c.) Professionalization of AI processes contributes to making technological applications more ethical and transparent. This helps in building a culture that encourages trust. Companies need accountable processes to leverage successful responsible AI.

There is a lack of consensus between our world leaders and we are not paying enough attention to training our leaders. This includes good leadership education for our business leaders, our political leaders, and our societal leaders. While scaling AI, many executives struggle when it comes to making sense of the business cases for how AI can bring value to their organizations. In the current world, these leaders are following a herd of their contemporaries who have referred to surveys that highlight the importance of engaging in AI adoption. But building a unique business case is not headlining their priority.

The need of the hour dictates that our leaders can adapt and be agile to cope with unprecedented circumstances. Leadership needs to define AI value for today—with a vision for tomorrow.

AI will become the new co-worker. It will be critical for organizations to clearly define where in the loop of the business process they should automate, where they should depend solely on machines, and where they should ensure collaboration between humans and machines, so that automation and the use of AI don’t lead to a work culture where humans feel like they are the subordinates of the machines. Humans believe in building a culture where they communicate and represent the values of the company to create business value.

Leadership is about dealing with change. You need to understand what it means to be a human – you can have human concerns, taking into account that you can be compassionate, and you can be humane. At the same time, leaders should be able to imagine strategies for collaboration between machines and humans. This collaboration will be used to build strategies to combat the unprecedented and to brainstorm ways in which processes can be adjusted to create the same value. A leader needs to be able to make an abstraction of this, and AI is not able to do this.

With a long-term view, some of the other aspects that an organization needs to plan for when scaling AI are:

a.) Transition from siloed work to interdisciplinary collaboration, where business, operational, IT, and analytics experts work side by side, by bringing a diversity of perspectives and ensuring initiatives address organizational priorities.

b.) Establish a strong AIOps practice for managing the processes for development, deployment, and governance

c.) Shift from traditional leader-only, rigid, and risk-averse decision making to an agile, experimental, and adaptable mindset by creating a minimum viable product in weeks rather than months and embracing the test-and-learn approach.

d.) Define and follow the Ethical AI framework and principles

e.) Ensure Data Security and Trust in the data

f.) Organize for scale – divide key roles between a central “Analytics Hub” (typically led by a chief data officer) and “spokes” (business units, functions, or geographies).

g.) Reinforce the change – With most AI transformations taking 2-3 years to complete, leaders must also take steps to keep the momentum for AI going during the journey by tracking the adoption, celebrating small successes, and providing incentives for change.

The AI landscape is dynamic thanks to the constant technological innovations and C-suite executives recognize the need to leverage AI for a data-driven reinvention. The secret to scaling AI is cultivating confidence in AI and designing the right governance structure to allow an ethical collaboration between humans and machines.

Professionalization is an integral part of scaling your AI and data practices. Enterprises that have leveraged professionalization to scale their AI processes are leading their industry when compared to their contemporaries who are still deliberating over ways to adopt responsible AI. With a clear understanding of what professionalization can do for the AI landscape, by exploring the benefits, and by employing the right leadership who can successfully delegate composite AI, an organization can make a considerable mark in the field of technological innovations.

How does your organization employ professionalization to scale AI processes?

Tags: AI, Cloud, Digital Twins

Edge Computing: The Future of Cloud

The IDC forecasts the global edge computing market to reach $250 billion by 2024, with a compounded annual growth of 12.5%. No wonder the industry is talking about Edge Computing.

Edge computing is one of the “new revolutionary technologies” that can change organizations wanting to break free from previous limitations of traditional cloud-based networks. The next 12–18 months will prove to be the natural inflection for edge computing. Practical applications are finally emerging where this architecture can bring real benefits.

91% of our data today is created and processed in centralized data centers. Cloud computing will continue to contribute to businesses in terms of cost optimization, agility, resiliency, and as an innovation catalyst. But in the future, the “Internet of Behaviors (IoB)” will power the next level of growth with endless new possibilities to re-imagine products and services, user experiences, and operational excellence. The IoB is one of the most sought-after and spoken-about strategic technology trends of 2021. As per Gartner, the IoB has ethical and societal implications, depending on the goals and outcomes of individual uses. It is also concerned with utilizing data to change behaviors. For instance, with the increase in technologies that gather the “digital dust” of daily life, this information can be used to influence behaviors through feedback loops, as seen in COVID-19 monitoring.

IoT, IIoT, AI, ML, Digital Twin, and Edge computing are at the core of the Internet of Behaviors. As per Gartner’s research, about 75% of all data will require analysis and action at the Edge by 2022. Organizations have been debating what separates edge computing from other, traditional data processing solutions. Whether it is right for their business, and to what extent, is also a hot topic.

The foundational principles of edge computing are relatively simple to comprehend but understanding its benefits can be complex. Edge computing can provide a direct on-ramp to a business’ cloud platform of choice and assists in achieving flexibility to facilitate a seamless IT infrastructure.

It is a distributed computing model where computing is conducted close to the geographical location where data is collected and analyzed, rather than using a centralized server or Cloud. The improved infrastructure uses sensors to gather data, while the edge servers safely process data on-site in real-time.

By miniaturizing the processing and storage tech, the network architecture landscape has experienced a massive shift in the right direction, where businesses can worry less about data security. Present-day IoT devices can quickly gather, store, and process far more data than they could before. This creates more opportunities for businesses to integrate and update their networks and relocate their processing functions close to where the data is gathered at the network edge, so that it can be assessed and applied in real-time closer to the intended users.

Edge computing is essential now because it is an upgrade for global businesses to improve their operational efficiency, boost their performance, and ensure data safety. It will also facilitate the automation of all core business processes and bring about the “always-on” feature. Edge computing holds the key to achieving total digital transformation of conducting business more efficiently.

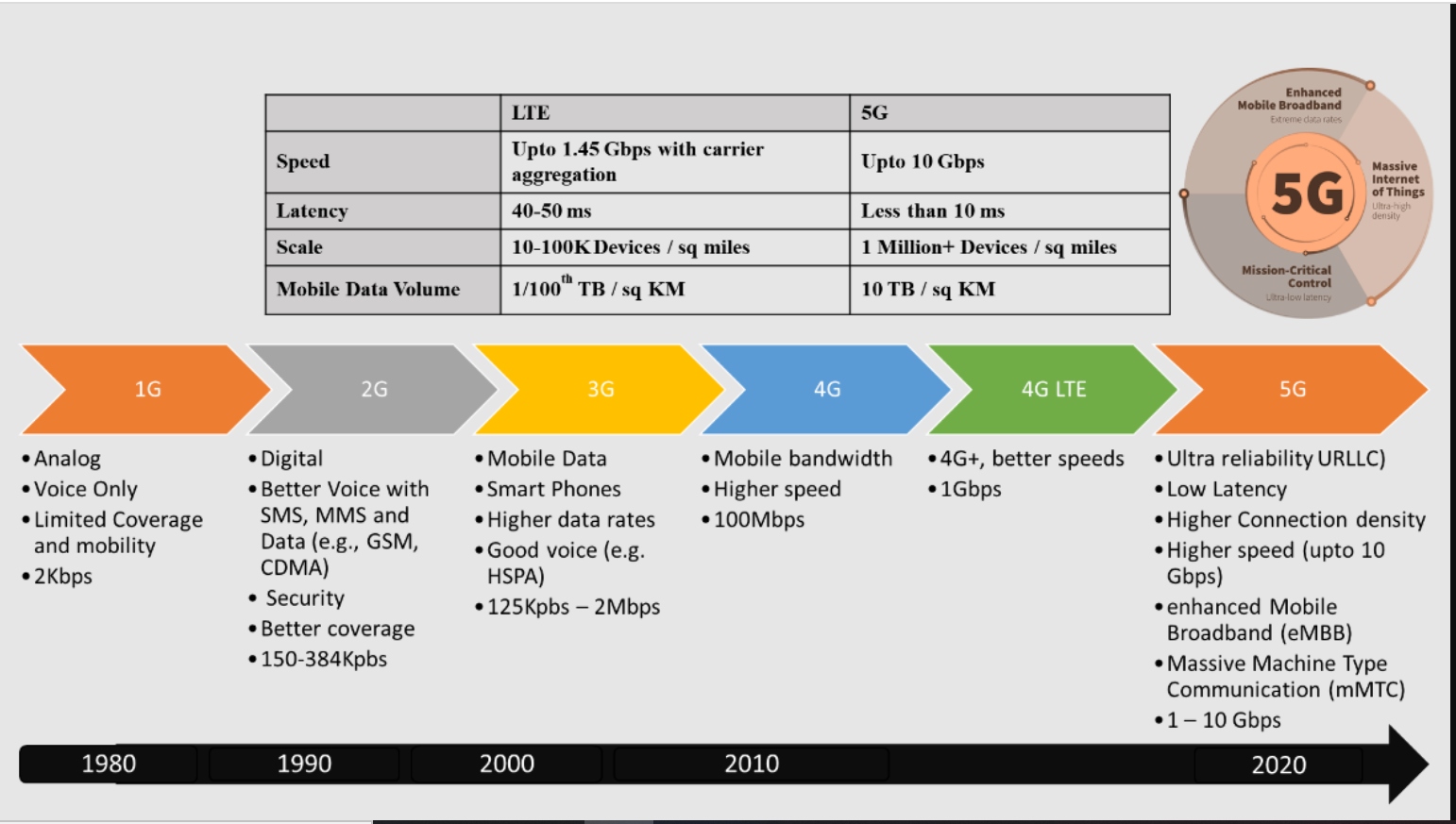

Edge technology is relevant today as it’s empowered by new technologies such as 5G, Digital Twin, and Cloud-native Application, Database, and Integration platforms.

By 2025, we will witness 1.2 billion 5G connections covering 34% of the global population. Highly reliable low latency is the new currency of the networking universe, underpinning capabilities that were previously impossible in many industries. With 5G, we’ll see a whole new range of applications enabled by the low latency of 5G and the proliferation of edge computing, transforming the art of the possible.

Moreover, Private 5G Network will fuel Edge computing and push enterprises to the Edge. Forrester sees immediate value in private 5G, a network dedicated to a specific business or locale like a warehouse, shipyard, or factory.

Response time or speed of response is an absolute necessity for the AI/ML-powered solution, especially if deployed in a remote location or the user is on the move. If there is even a millisecond of delay in the algorithms of a remote patient monitoring system at hospitals, it could cost someone their life. Companies that render data-driven services cannot afford to lag in speed as it can have severe consequences to the brand reputation and customer’s quality of experience.

Container technology like Docker and Kubernetes allows companies to run prepackaged software containers more quickly, reliably, and efficiently. Armed with these technologies, companies can set up and scale Micro Clouds wherever and however they want.

Service and Data mesh facilitate a channel to release and query data or services distributed through datastores and containers across the Edge, making it a critical enabler. It also allows bulk queries for the entire population within the Edge over each device, bringing greater ease.

Software-defined networking enables the configuration of the overlay networks by users, making it simpler to customize routing and bandwidth to determine a way to connect edge devices and the Cloud.

The digital twin is a crucial enabler responsible for organizing physical-to-digital and cloud-to-edge, letting domain experts (not just software engineers) configure their applications to observe, think and act according to the Edge.

The maturity of IIoT platforms and Edge AI pave the way for IT-OT convergence, thereby offering an innovation advantage to the business.

The industrial Internet of Things or IIoT sensors provides a more significant business advantage such as greater productivity and efficiency and cost reduction for data collection, analysis, and exchange.

Multi-access edge computing (MEC) transforms the topology and the architecture of mobile networks from a pure communication network for voice and data to an application platform for services. MEC complements and enables the service environment that will characterize 5G. Examples: Connected Cars, Industry 4.0, Remote Patient Monitoring, eHealth.

XR represents an immersive interface for work collaboration in a virtualized environment. With the help of edge computing, these experiences become even more detailed and interactive.

Innovation in heterogeneous hardware and ruggedized HCI / Edge devices is making Edge computing more pervasive, as these devices process a greater volume of data quickly while using less power. Integrating this specialized hardware on Edge enables efficient computation within physical environments while accelerating the response rates.

Hyperscalers, along with 5G and chip OEMs, are innovating at speed to capture the market. Azure Percept is Microsoft’s latest edge computing platform, bringing the best hardware, software, and cloud services to the Edge. Azure Percept is an excellent device for makers and builders to build and prototype intelligent IoT applications powered by Azure Cognitive Services and Azure Machine Learning Services.

New privacy-oriented technologies include techniques and hardware that enable data to be processed without exposing all the problematic aspects. Data is encrypted during storage and transmission. However, privacy-preserving tech is bound to safeguard data even in the computing stage, making it more reliable for other lines of the organization and its partners, especially when required to be processed on Edge.

Robotics can be configured to act following signals and updates by the Edge. This has been seen in life-saving surgical procedures where agility and precision are of utmost importance. Both Edge and Cloud are of utmost importance to control the robot’s moves and executions through stored data while ensuring no lag between movements.

From what we are witnessing so far, Edge Computing represents the future of a cloud technology extension by making it bulletproof. Discussed below are a few ways we may see this continuum manifest:

A substantial amount of computing is already being carried out on Edge at manufacturing units, hospitals, and retail sectors, where the majority operate on the most sensitive data. It also powers the most critical systems that are required to function safely and reliably. Edge can drive decisions on these core functional systems, which creates an opportunity for AI and IoT to tap into them.

Understanding and assuming control of the Edge also gives you control of the closest point of data action. Utilizing this unique opportunity to relay differentiated services can help a business in great ventures with valuable partnerships that branch out.

For instance, edge computing is beneficial to an automobile manufacturer and the insurance vendor, the companies that provide energy and utilities, and the city planners. Edge computing can offer your business new data, and you can offer more excellent value to your partners, which is a win-win scenario. The new edge-friendly data and services are processed in the Cloud, integrating with other organizational applications and data.

Edge computing is the need of the hour to maximize the returns of the next-generation technologies, as the current scope needs to be broadened anyway. As time passes, so does the need grow for a better technological support system for data processing that is faster, smarter, and more efficient. Their collective effect can give new features such as voice input to your vehicle or remote operations using teleoperation. Edge facilitates the control and programmability required to link these capabilities into an organization.

Today’s Cloud world is characterized by a limited number of mega data centers in remote locations. Data travels from a device to the Cloud and back to execute a computation or data analysis. This round trip typically takes 50 to 100 milliseconds over today’s 4G networks.

Data traveling over 5G at less than five milliseconds facilitates the edge cloud and the ability to create new services that it empowers.
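To make the difference concrete, here is a minimal Python sketch that turns the round-trip figures quoted above into the number of strictly sequential request/response cycles a device could complete per second. The function name and the assumption that each request waits for the previous response are mine, chosen purely for illustration; real workloads batch and pipeline requests.

```python
def round_trips_per_second(round_trip_ms: float) -> float:
    """Sequential request/response cycles that fit into one second.

    Assumes each request waits for the previous response (no pipelining),
    which is a simplification made for this illustration.
    """
    if round_trip_ms <= 0:
        raise ValueError("round_trip_ms must be positive")
    return 1000.0 / round_trip_ms


# Round-trip figures taken from the text: 50-100 ms on 4G, under 5 ms on 5G.
for label, latency_ms in [("4G best case", 50), ("4G worst case", 100), ("5G", 5)]:
    print(f"{label}: {round_trips_per_second(latency_ms):.0f} round trips per second")
```

Under these assumptions, a control loop that needs a decision every 10 milliseconds cannot wait for a 4G round trip to a remote data center, but it can be served by a nearby edge node over 5G.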

Decentralizing traditional IT infrastructure is at the core of edge computing and complementary to centralized cloud computing.

Edge computing is one of the three origins of distributed Cloud, which makes it highly relevant going forward. CIOs can use distributed cloud models to target the location-dependent cloud use cases that will be required in the future. According to Gartner, by 2024 most cloud service platforms will provide at least some distributed cloud services that execute at the point of need. Distributed Cloud retains the benefits of cloud computing while extending the range and use cases for the Cloud.

Today, everything is getting “smart” or “intelligent” because of technology. From home appliances and automobiles to industrial equipment, a substantial number of products and services use AI to interpret commands, analyze data, recognize patterns, and make decisions for us. Most of the processing that powers today’s intelligent products is handled remotely (in the Cloud or a data center), where there’s enough computing power to run the required algorithms.

Edge, combined with 5G’s higher bandwidth and distributed Cloud’s low-latency computation, is a future that was only imagined less than a decade ago and is now within our reach. What is impressive is that these technological leaps are more significant than we could have imagined. Edge computing is like science fiction materializing, only with greater possibilities and room for expansion. Not only will your business face a new generation of success; using the Edge will also help you run your organization more efficiently, innovate faster, and derive better value from affiliations.

Tags: Cloud, COVID19, Digital Twins

Data Governance — A QuickStart With Azure Purview

“When we talk about assets on the balance sheet, Data deserves its row.” - Satya Nadella, Microsoft CEO

As an organization, you face a big question: how should you handle users’ data? It can be used to support your business, or it can be used to give your end-users a better experience.

With enough data and a roadmap to use that data effectively, you can accelerate your company’s growth. But you cannot use data effectively without data governance. Here’s every “Why? How? Where?” you need to know about data governance and Azure Purview.

Data is the new currency of the digital age, and data within organizations is growing at exponential rates. 90% of data today was created in just the last two years, and by 2025, 80% of data will be unstructured. This influx of data has multiplied organizations’ challenges many times over.

To get real business value from Data, the organization needs to know:

A lack of understanding of any of the above can create operational inefficiencies, confusion about the data and information being distributed internally and externally, and poor business decisions based on flawed or misunderstood data. That is only part of the problem, as regulators are cracking down on companies for any lapses in compliance, data privacy, and data sovereignty (and I won’t be surprised if we soon start seeing regulations around the ethical use of data).

According to Gartner, “Data governance is the specification of decision rights and an accountability framework to ensure the appropriate behavior in the valuation, creation, consumption, and control of data and analytics.”

Data governance helps ensure the data is usable, accessible, and protected. It also leads to better-informed analytics, because the organization can draw its conclusions from data it trusts. Data governance also improves the consistency of the data, removes redundancies, and helps make sense of garbage data, which can save an organization from costly decision-making mistakes.

Data governance also provides organizations with:

Microsoft Azure Purview is a fully managed, unified data governance service that helps you manage and govern your on-premises, multi-cloud, and SaaS data. Purview creates a holistic, up-to-date map of your data landscape with automated data discovery, sensitive data classification, and end-to-end data lineage. Purview empowers data consumers to find valuable, trustworthy data.

It’s built on Apache Atlas, an open-source project for metadata management and governance of data assets. Azure Purview also has a data share mechanism that securely shares data with external business partners without setting up extra FTP nodes or creating redundant large datasets. Azure Purview does not move or store customer data out of the region in which it is deployed.

There is currently no licensing cost associated with Purview; you pay for what you use. The pay-per-use model offered by Microsoft as part of Public Preview is exciting for Microsoft customers looking to move quickly without having to create a business case to secure an additional budget. Azure Purview reduces costs on multiple fronts, including cutting down on manual and custom efforts to discover and classify data and eliminating hidden and explicit costs of maintaining homegrown systems and Excel-based solutions.

It supports the following types of data sources at the time of writing:

Azure Purview consists of the following main features:

Azure Purview Data Map provides the foundation for data discovery and effective data governance. It’s a cloud-native PaaS service that captures metadata about enterprise data held in analytics and operational systems, both on-premises and in the cloud. Purview Data Map is automatically kept up to date by a built-in automated scanning and classification system. Business users can configure and use the Purview Data Map through an intuitive UI, and developers can programmatically interact with the Data Map using open-source Apache Atlas 2.0 APIs.

Purview Data Map powers the Purview Data Catalog and Purview Data insights as unified experiences within the Purview Studio.

Data Map extracts metadata, lineage, and classifications from existing data stores. It lets you classify data at cloud scale using 100+ built-in classifiers as well as your own custom classifiers. With Purview Data Map, organizations can centrally manage, publish, and inventory metadata at cloud scale and extend it further using the open Apache Atlas APIs.

Labeling of sensitive data is supported consistently across database servers, Azure, Microsoft 365, and Power BI. Purview also lets you easily integrate all your data systems using the open-source Apache Atlas APIs.
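To illustrate what a custom classifier does conceptually, here is a minimal, standalone Python sketch of pattern-based column classification. It is not the Purview API. The `EMP-` employee ID format, the function name, and the 60% match threshold are all hypothetical values chosen for this example.

```python
import re

# Hypothetical pattern for an internal employee ID such as "EMP-004567".
EMPLOYEE_ID = re.compile(r"EMP-\d{6}")


def classify_column(values, pattern, min_match_ratio=0.6):
    """Return True when enough non-empty values fully match the pattern.

    min_match_ratio is an assumed threshold for this sketch: a column is
    classified only if at least that share of its non-empty values match.
    """
    non_empty = [v for v in values if v]
    if not non_empty:
        return False
    matches = sum(1 for v in non_empty if pattern.fullmatch(v))
    return matches / len(non_empty) >= min_match_ratio


sample = ["EMP-000123", "EMP-004567", "n/a"]
print(classify_column(sample, EMPLOYEE_ID))
```

Using a threshold rather than requiring every value to match lets the classifier tolerate a few dirty or placeholder values in an otherwise well-formed column.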

With Data Catalog, Purview enables rich data discovery: users can search by business and technical terms and understand data by browsing the associated technical, business, semantic, and operational metadata.

Data catalog, along with information on the data source and interactive data lineage visualization, empowers data scientists, engineers, and analysts with business context to drive BI, analytics, AI, and machine learning initiatives.

Purview helps companies to understand their data supply chain from raw data to business insights. From a Data lineage perspective, Purview currently supports:

Using Purview Data Insights, data officers and security officers can get a bird’s-eye view and, at a glance, understand what data is actively scanned, where sensitive data is, and how it moves.

The data governance component gives users a bird’s-eye view of the organization’s data landscape, so they can quickly determine which analytics and reports already exist. It enables stakeholders to maintain and use the organization’s data efficiently. This view gives you crucial insights such as how data is distributed across environments, how data is being moved, and where sensitive data is stored.

Purview Studio is essentially an environment created for you to work with the Azure Purview services after creating an account. The studio is a central control area that allows developers, administrators, and end-users to work with Purview. This tool is the next step in the process of using Azure Purview.

Azure Purview is in its early days and has a few gaps that need to be addressed. Here are a few limitations of Azure Purview:

Azure Purview is not currently a one-stop-shop solution for enterprise-level data governance, but based on the roadmap shared, it won’t be long before the Purview team pull up their socks and cover enough to make Azure Purview an enterprise-grade data governance suite.

Azure Purview is there to help you manage your data better, and here’s how it helps you process your data and convert it into an asset:

Azure Purview allows you to catalog your data and apply customized tags to it, so that you, the end-user, can locate and understand it more easily.

It also helps you maintain Data Quality in situations where your data must be complete, unique, valid, accurate, consistent, relevant, reliable, and accessible. Governance tools such as the data catalog will help you with this.
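As a rough illustration of three of these dimensions (complete, unique, valid), here is a minimal plain-Python sketch that scores a list of records. The field names, the simple email pattern, and the scoring formulas are assumptions made for this example; they are not how Purview or any particular governance tool measures quality.

```python
import re

# Deliberately simple email pattern, used only for this illustration.
EMAIL = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def quality_report(rows, key_field, email_field):
    """Score records on completeness, uniqueness, and validity (0.0 to 1.0)."""
    total = len(rows)
    if total == 0:
        return {"completeness": 0.0, "uniqueness": 0.0, "validity": 0.0}
    # Complete: no field in the record is missing or empty.
    complete = sum(
        1 for r in rows if all(v not in (None, "") for v in r.values())
    )
    # Unique: share of distinct key values among all records.
    unique = len({r[key_field] for r in rows})
    # Valid: the email field is present and matches the pattern.
    valid = sum(
        1 for r in rows
        if r.get(email_field) and EMAIL.fullmatch(r[email_field])
    )
    return {
        "completeness": complete / total,
        "uniqueness": unique / total,
        "validity": valid / total,
    }


customers = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": ""},
    {"id": 2, "email": "not-an-email"},
    {"id": 3, "email": "c@example.com"},
]
print(quality_report(customers, "id", "email"))
```

Scores like these are most useful when tracked over time per data source, so that a drop in completeness or validity is noticed before it reaches a report.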

As an organization, it falls on you to provide the utmost security for end-user data. Under government laws and data mandates, end-users can demand that their data be removed from a company’s servers, or that its content be changed, at any point. Azure Purview lets you create an automated process that streamlines these service requests and produces the documentation required by law.

It provides a unified map of your data assets. This helps in forming an effective data governance system.

You can run searches based on technical, business, and operational terms. One can identify the sensitivity level of the data and can understand the interactive data lineage.

Get continuous updates about the location of the data and continuous insight into its movement through your multi-layer data landscape. Along with this, Azure Purview provides you with services like a Data catalog and Business glossary.

The data catalog is a core element of any data governance software. It can scan all the data sources and identify, index, connect, and classify registered users’ data sets.

The business glossary is a collection of terms with brief definitions, each of which connects to other terms. With a business glossary, it’s possible to automate the process of classifying data sets and annotating them with the correct business terms so end-users can understand them more easily. A business glossary is the foundation of the semantic layer that an organization uses to define a common language for its business.

With features like these, Microsoft Azure Purview allows your data to become a crucial asset.

Data governance is a must-have strategy for all enterprises that want to use data as an asset. It is complex, yet it is a foundational pillar in any enterprise’s data journey. Data governance helps to democratize data responsibly through accessible, trusted, and connected enterprise data at scale.

Microsoft Azure Purview provides a good starting point for cloud-native data governance solutions. Azure Purview helps answer the who, what, when, how, where, and why of data. From a feature point of view, I would say Azure Purview has the potential to be a game-changer with features like Data Catalog, Data Insights, Data Map, Business Glossary, and pipelines to manage your data sources and destinations.

Azure Purview has solid potential to shape a new Data Governance as a Service (DGaaS) industry and open up new opportunities for businesses to explore.

Tags: Cloud, COVID19, Digital Twins

Cloud Cost Optimization: A Pivotal Part of Cloud Strategy

According to Gartner, businesses will be spending about $333 billion by the end of 2022 on cloud infrastructure, and according to McKinsey, cloud spending will increase by 47% in the year 2021. These numbers are staggering and certainly depict a very positive picture here. However, cloud consumers need to assess the pay-off of such significant cloud spending.

McKinsey also reported that companies exceeded their cloud budget by 23% and that 30% of their outlays were wasted. This leads me to wonder whether businesses have been able to optimize operations from their cloud investments. Has the Cloud just added to their costs, or has it been good value for their money? And lastly, why do some companies still grapple with mismanaged or added costs during their cloud journey?
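To put those two percentages in concrete terms, here is a small Python sketch that applies them to a planned budget. It assumes the 30% waste applies to actual outlays (budget plus overrun), which is my reading of the figures rather than something the report states; the function name and the sample budget are illustrative only.

```python
def cloud_spend_breakdown(budget, overrun_rate=0.23, waste_rate=0.30):
    """Split a planned cloud budget into actual, wasted, and useful spend.

    Assumption for this sketch: waste_rate is applied to actual outlays,
    that is, the planned budget plus the overrun.
    """
    actual = budget * (1 + overrun_rate)
    wasted = actual * waste_rate
    return {"actual": actual, "wasted": wasted, "useful": actual - wasted}


breakdown = cloud_spend_breakdown(1_000_000)
for name, amount in breakdown.items():
    print(f"{name}: ${amount:,.0f}")
```

Under these assumptions, a planned budget of $1,000,000 turns into roughly $1,230,000 of actual spend, of which about $369,000 is wasted. That leaves about $861,000 of useful spend, less than the original budget.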

These pertinent questions need to be answered at a time when companies are struggling to stay afloat and are trying to reduce their overall costs. Cloud costs are not only IT costs; they also include certain operational and managerial costs.

So, how should organizations approach the cloud cost optimization journey? Let me guide you through it in this blog.