Oct24

The pursuit of Artificial General Intelligence (AGI), a machine capable of matching or exceeding human intellectual capabilities across diverse tasks, began over half a century ago and was famously formalized at the 1956 Dartmouth workshop. Early efforts focused primarily on symbolic reasoning and logic. Modern research, however, influenced by pioneers such as Yann LeCun, increasingly argues that true general intelligence must be embodied and predictive, rooted in the ability to understand and model the continuous physics of the real world. This requires bridging the gap between abstract thought and raw sensory data.

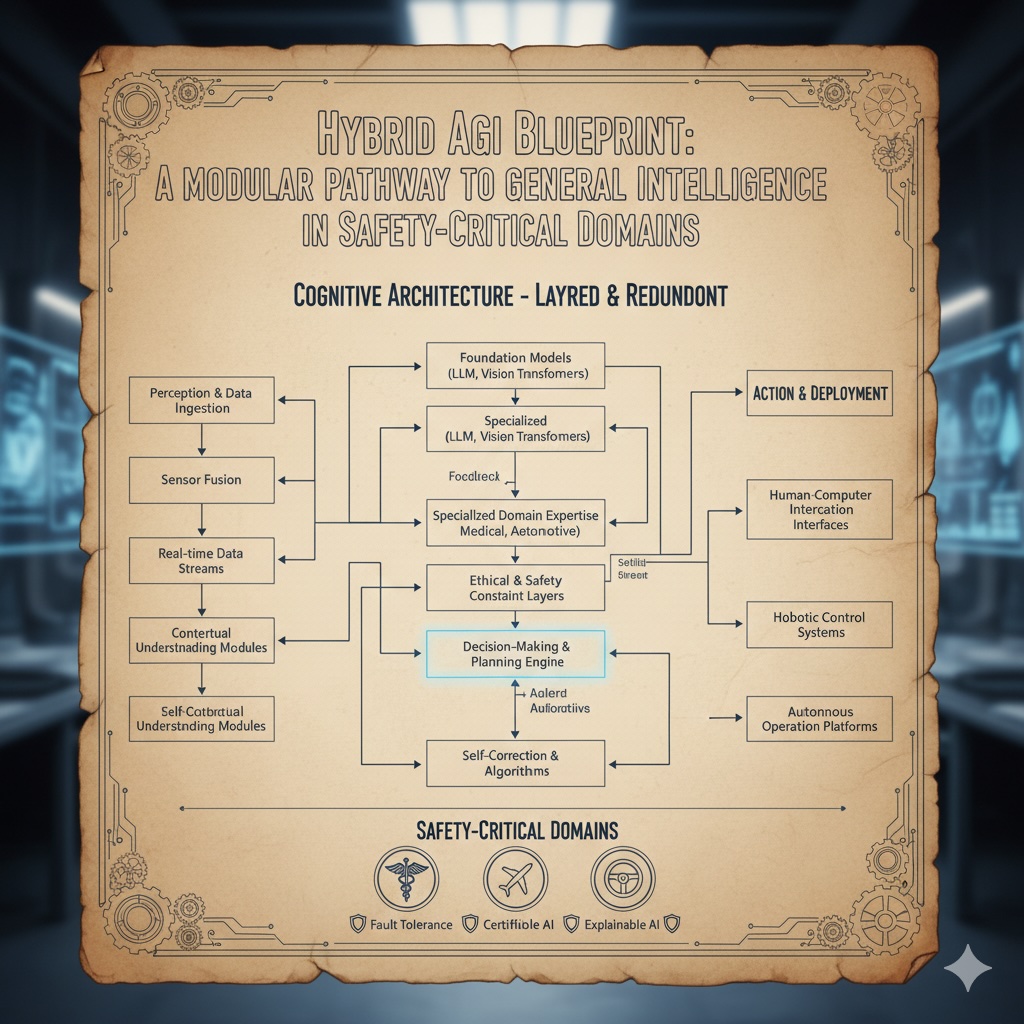

The motivation for building such robust systems is not abstract theory; it is a necessity in safety-critical domains. In fields where failure is catastrophic, such as controlling an aircraft or making a clinical diagnosis, AI must exhibit not just performance, but reliability, foresight, and ethical adherence. The monolithic, single-model approach of the past has proven insufficient for these complex demands. What is required is a comprehensive cognitive architecture that allows specialized modules to collaborate, creating a synergistic "mind" that is both highly performant and rigorously verifiable.

The following analysis presents the Hybrid AGI Blueprint, demonstrating this modular, multi-agent approach across two distinct, high-stakes environments: dynamic flight planning and life-critical clinical decision-making.

The two conceptual AGI demonstration programs employ distinct models but share a common modular framework for integrating perception, reasoning, and safety.

1. Aviation AGI Demo Code (Dynamic Planning and Predictive Modelling)

This code implements a Hybrid AI Agent for Flight Planning, primarily demonstrating the ability to perceive a dynamic environment, model its causality, and perform constrained, predictive planning.

2. Medical AGI Demo Code (Multimodal Diagnostic Reasoning and Safety Adherence)

This code implements a Multi-Agent System for Clinical Diagnostic Reasoning, focusing on synthesizing multimodal data (image and text) and ensuring the final output adheres to non-negotiable safety and clinical standards through rigorous internal validation.

The foundational design of the Hybrid AGI Blueprint rests on five pillars, initially proposed by researchers in the field to outline the components needed to achieve human-level intelligence. The mapping below illustrates how each abstract pillar is realized through concrete components in the two safety-critical domains.

| AGI Pillar | Definition | Aviation Demo Mapping | Medical Demo Mapping |
|---|---|---|---|
| Pillar 1: World Models | Systems that can build internal, predictive models of the world, distinguishing between text-based reasoning and complex physical reality. | Implemented by the V-JEPA/CLIP system, extracting visual features from video (raw reality) and classifying the observed flight phase. | Implemented by the I-JEPA (conceptual) extractor, which turns raw multimodal images into "Grounded Perception Facts." |
| Pillar 2: Autonomous Causal Learning | The capacity to discover and utilize the underlying causal structure of a system, rather than just memorizing correlations. | Implemented by the PLDM, explicitly trained on real-world TartanAviation trajectories to learn the transition function. | Implemented implicitly by forcing the Qwen3-VL-8B LLM to perform predictive analysis of complex outcomes (necrosis risk) based on its synthesized clinical knowledge. |
| Pillar 3: Modular Systems (Planning) | Systems that can reason, plan, and act coherently by efficiently managing resources (energy, time) and designing toward a verifiable goal state. | Demonstrated by the Total Cost Function and the planning loop, which optimizes for goal proximity while minimizing fuel cost and resource expenditure. | Demonstrated by the LLM's output synthesizing a complete, multi-stage plan (Diagnosis, Acute Management, Long-Term Strategy) for the patient. |
| Pillar 4: Embodied Salience & Ethics | The ability to be grounded in sensory experience, focus on what truly matters, and align ethically with human safety values. | Implemented by integrating salience (weather data) and an Ethical Boundary Latent Vector directly into the mathematical cost function, penalizing unsafe actions. | Implemented by the Validation Agent (Guardian), which enforces non-negotiable adherence to clinical safety standards (NEJM-grade facts). |
| Pillar 5: Cognitive World Models (Hybrid Integration) | The capability to combine lower-level, continuous perception with abstract, symbolic reasoning (analog-digital bridge) to achieve general problem-solving. | The integration of continuous V-JEPA output (analog) with the symbolic DeepSeek LLM (digital/abstract reasoning) for operational assessment. | The integration of the raw CT image (analog) with the structured, corrective linguistic input from the Prompt Engineer Agent to achieve convergence on a definitive clinical truth. |

Both demonstrations integrate low-level predictive models and high-level cognitive models. The core challenge is addressed through an **Analog-Digital Integration Layer** that condenses continuous sensory data into discrete, verifiable facts. The Aviation PLDM learns physics-based transitions from real-world data, while the medical LLM predicts complex outcomes (e.g., necrosis) from evidence and clinical knowledge, demonstrating predictive reasoning.
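One way to picture the Analog-Digital Integration Layer is as a CLIP-style nearest-label classifier: a continuous feature vector is compared by cosine similarity against one embedding per candidate label, and only a sufficiently confident match is emitted as a discrete fact. The sketch below assumes that design; the 3-D label embeddings, the `to_grounded_fact` helper, and the 0.6 threshold are hypothetical toy values, not the demo's V-JEPA/CLIP pipeline.

```python
import math
from typing import Dict, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def to_grounded_fact(features: Sequence[float],
                     label_embeddings: Dict[str, Sequence[float]],
                     min_similarity: float = 0.6) -> dict:
    """Condense a continuous feature vector into one discrete, checkable fact."""
    scores = {label: cosine(features, emb) for label, emb in label_embeddings.items()}
    best = max(scores, key=scores.get)
    # Refuse to emit a fact when no label matches well enough.
    fact = best if scores[best] >= min_similarity else "unknown"
    return {"fact": fact, "similarity": round(scores[best], 3)}


# Toy 3-D "text" embeddings; real CLIP embeddings are learned and much larger.
FLIGHT_PHASES = {
    "airplane landing": (1.0, 0.0, 0.0),
    "airplane taking off": (0.0, 1.0, 0.0),
    "airplane cruising": (0.0, 0.0, 1.0),
}

print(to_grounded_fact((0.9, 0.1, 0.2), FLIGHT_PHASES))
# {'fact': 'airplane landing', 'similarity': 0.97}
print(to_grounded_fact((1.0, 1.0, 1.0), FLIGHT_PHASES))
# {'fact': 'unknown', 'similarity': 0.577}
```

The "unknown" fallback is what makes the output verifiable: the downstream reasoning model only ever receives a label together with the similarity that justified it.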

The crucial convergence between the two demos is their non-negotiable adherence to safety and ethical constraints.

* Aviation enforces constraints mathematically using a Total Cost Function during its planning loop, penalizing factors like high fuel consumption and ethical deviations.

* Medicine implements constraints through an explicit, linguistic, multi-agent feedback loop. The Validation Agent acts as the Guardian, and the Prompt Engineer Agent corrects the input, forcing the primary model to converge on a safe clinical protocol.
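The medical constraint loop described in the list above can be sketched as a generate, validate, correct cycle. In this minimal sketch the primary model, the Validation Agent (Guardian), and the Prompt Engineer Agent are plain callables, and the toy stand-ins (`toy_generate`, `toy_validate`, `toy_correct`, and the two-item `REQUIRED_FINDINGS` checklist) are hypothetical placeholders for the LLM calls and clinical rule base the demo would actually use.

```python
from typing import Callable, List, Tuple

Generate = Callable[[str], str]
Validate = Callable[[str], List[str]]
Correct = Callable[[str, List[str]], str]


def constraint_loop(prompt: str, generate: Generate, validate: Validate,
                    correct: Correct, max_rounds: int = 3) -> Tuple[str, bool, int]:
    """Generate, validate, and re-prompt until the validator reports no violations.

    Returns (final_report, approved, rounds_used).
    """
    report = ""
    for round_no in range(1, max_rounds + 1):
        report = generate(prompt)             # primary model drafts a report
        violations = validate(report)         # Guardian lists unmet constraints
        if not violations:
            return report, True, round_no
        prompt = correct(prompt, violations)  # Prompt Engineer rewrites the input
    return report, False, max_rounds


# Toy stand-ins; a real system would call the LLM and a clinical rule base here.
REQUIRED_FINDINGS = ("necrosis risk", "perforation risk")


def toy_generate(prompt: str) -> str:
    return "Report addressing: " + prompt


def toy_validate(report: str) -> List[str]:
    return [item for item in REQUIRED_FINDINGS if item not in report]


def toy_correct(prompt: str, violations: List[str]) -> str:
    return prompt + " Explicitly assess: " + ", ".join(violations) + "."


report, approved, rounds = constraint_loop(
    "Toy CT findings.", toy_generate, toy_validate, toy_correct)
print(approved, rounds)  # True 2
```

Capping the loop with `max_rounds` and returning an explicit `approved` flag means a report that never satisfies the Guardian is surfaced as unapproved instead of being silently passed on.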

These demos move beyond narrow AI by integrating multiple cognitive functions into a single, cohesive, goal-driven system.

1. Generalization and Complexity in Safety-Critical Domains

* Aviation (Flight Planning): Requires real-time predictive planning based on dynamic causal models.

* Medicine (Clinical Decision-Making): Requires synthesizing multimodal data, abstract reasoning, and adhering to ethical/safety constraints.

2. The Modular, Multi-Agent Architecture

Both systems adopt a modular, multi-agent approach.

| Architectural Feature | Aviation Demo | Medical Demo | AGI Pillar |
|---|---|---|---|
| Perception/Grounding | Uses V-JEPA/CLIP features to generate discrete labels ("airplane landing"). | Uses I-JEPA (conceptual) to extract definitive "Grounded Perception Facts". | World Models & Integration (Pillars 1 & 5) |
| Prediction/Causality | Uses a PLDM trained on TartanAviation trajectories to forecast the next state given an action. | Uses the Qwen3-VL-8B to perform predictive analysis of complications (e.g., necrosis/perforation risk) based on NEJM-grade facts. | Causal Structure & Prediction (Pillar 2) |
| Constraint/Safety | Uses a Total Cost Function that incorporates ethical and salient variables (e.g., fuel cost, ethical boundary deviation) to guide planning. | Uses the Validation Agent and Prompt Engineer Agent in a feedback loop to force clinical and safety-critical adherence. | Ethical & Modular Systems (Pillars 3 & 4) |
| Abstract Reasoning | Uses the DeepSeek LLM to translate technical output into a human-readable "operational assessment". | Uses the Qwen3-VL-8B to synthesize a full clinical report, differential diagnosis, and long-term strategy. | Cognitive World Models (Pillar 5) |
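The Prediction/Causality row describes a model that learns to forecast the next state from the current state and an action. The PLDM itself is a learned latent dynamics model; the sketch below deliberately swaps in a much simpler linear model fitted by ordinary least squares, and trains it on a synthetic trajectory rather than TartanAviation data. The class name, the per-dimension `gain`/`bias` form, and the assumption that each action component drives one state component are all hypothetical simplifications.

```python
from typing import List, Sequence, Tuple

# (state, action, next_state) triples, e.g. sampled from recorded trajectories.
Transition = Tuple[Sequence[float], Sequence[float], Sequence[float]]


class LinearTransitionModel:
    """Toy stand-in for a learned dynamics model.

    Assumes each action component drives the matching state component:
        next[d] = state[d] + gain[d] * action[d] + bias[d]
    and fits gain/bias per dimension by ordinary least squares.
    """

    def __init__(self) -> None:
        self.gain: List[float] = []
        self.bias: List[float] = []

    def fit(self, transitions: List[Transition]) -> "LinearTransitionModel":
        dims = len(transitions[0][0])
        self.gain, self.bias = [], []
        for d in range(dims):
            acts = [t[1][d] for t in transitions]
            deltas = [t[2][d] - t[0][d] for t in transitions]  # observed change
            n = len(acts)
            mean_a, mean_y = sum(acts) / n, sum(deltas) / n
            var_a = sum((a - mean_a) ** 2 for a in acts)
            cov = sum((a - mean_a) * (y - mean_y) for a, y in zip(acts, deltas))
            k = cov / var_a if var_a > 0 else 0.0
            self.gain.append(k)
            self.bias.append(mean_y - k * mean_a)
        return self

    def predict(self, state: Sequence[float], action: Sequence[float]) -> List[float]:
        return [s + k * a + b for s, a, k, b in zip(state, action, self.gain, self.bias)]


def toy_trajectory(steps: int = 8) -> List[Transition]:
    """Synthetic 2-D trajectory with known dynamics (gain 0.5, bias (0.1, -0.2))."""
    actions = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (-1.0, 0.5)]
    bias = (0.1, -0.2)
    state: Tuple[float, ...] = (0.0, 0.0)
    data: List[Transition] = []
    for t in range(steps):
        act = actions[t % len(actions)]
        nxt = tuple(s + 0.5 * a + b for s, a, b in zip(state, act, bias))
        data.append((state, act, nxt))
        state = nxt
    return data


model = LinearTransitionModel().fit(toy_trajectory())
print([round(g, 3) for g in model.gain], [round(b, 3) for b in model.bias])
# [0.5, 0.5] [0.1, -0.2]
print([round(x, 3) for x in model.predict((2.0, 3.0), (1.0, 1.0))])
# [2.6, 3.3]
```

Because the synthetic data is noise-free, the fit recovers the generating dynamics exactly; with real trajectories the same interface (fit on transitions, then `predict(state, action)`) is what a planner would query.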

The Hybrid AGI Blueprint puts into practice Yann LeCun's vision for Advanced Machine Intelligence (AMI), which he positions as the successor to LLMs. Its design principles address LLM deficiencies by illustrating AMI's core tenets:

* Machines that Understand Physics: The Aviation demo's PLDM learns the continuous effects of actions on state variables. The Medical demo's LLM performs causal medical reasoning, predicting physical consequences like perforation or necrosis.

* AI that Learns from Observation and Experimentation: The Medical demo's iterative Constraint Loop forces the system to _experiment_ and learn through experience until its output aligns with clinical ground truth. The Aviation demo's MPPI planning loop serves as a rapid-experimentation system, evaluating hundreds of simulated actions to find the optimal path.

* Systems that Can Remember, Reason, and Plan Over Time: The perception layer gathers the "observation," the causal model performs planning over a time horizon, and the multi-agent system uses constraints to guide reasoning. The Medical system constructs a long-term management strategy, demonstrating deep temporal planning.
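The MPPI (Model Predictive Path Integral) planning loop mentioned in the list above can be sketched in a few dozen lines: perturb a nominal action sequence with Gaussian noise, roll each perturbed sequence through a dynamics model, weight the rollouts by the exponential of their negative cost, average them into a new nominal plan, and execute only the first action before replanning. This is a generic MPPI sketch under stated assumptions: the point-mass `dynamics` stands in for the learned PLDM, the cost is a simple distance-plus-fuel term, and the sample count, noise level, and temperature are hypothetical values.

```python
import math
import random
from typing import List, Tuple

Vec = Tuple[float, float]


def dynamics(state: Vec, action: Vec) -> Vec:
    # Hypothetical point-mass model standing in for the learned PLDM.
    return (state[0] + action[0], state[1] + action[1])


def rollout_cost(state: Vec, actions: List[Vec], goal: Vec, fuel_weight: float = 0.1) -> float:
    """Simulate an action sequence and accumulate distance-to-goal plus fuel cost."""
    cost = 0.0
    for a in actions:
        state = dynamics(state, a)
        cost += math.dist(state, goal) + fuel_weight * math.hypot(*a)
    return cost


def mppi_step(state: Vec, nominal: List[Vec], goal: Vec, rng: random.Random,
              num_samples: int = 200, noise_std: float = 0.5,
              temperature: float = 1.0) -> List[Vec]:
    """One MPPI update: perturb the nominal plan, score rollouts, and average them."""
    samples, costs = [], []
    for _ in range(num_samples):
        seq = [(u[0] + rng.gauss(0.0, noise_std), u[1] + rng.gauss(0.0, noise_std))
               for u in nominal]
        samples.append(seq)
        costs.append(rollout_cost(state, seq, goal))
    best = min(costs)
    # Softmin weights: lower-cost rollouts get exponentially more influence.
    weights = [math.exp(-(c - best) / temperature) for c in costs]
    total = sum(weights)
    return [
        (sum(w * s[t][0] for w, s in zip(weights, samples)) / total,
         sum(w * s[t][1] for w, s in zip(weights, samples)) / total)
        for t in range(len(nominal))
    ]


def plan_and_fly(start: Vec, goal: Vec, steps: int = 20, horizon: int = 8,
                 seed: int = 0) -> Vec:
    """Receding-horizon control: replan each step, execute only the first action."""
    rng = random.Random(seed)
    state, nominal = start, [(0.0, 0.0)] * horizon
    for _ in range(steps):
        nominal = mppi_step(state, nominal, goal, rng)
        state = dynamics(state, nominal[0])
        nominal = nominal[1:] + [nominal[-1]]  # warm-start the next plan
    return state


final = plan_and_fly((0.0, 0.0), (5.0, 0.0))
print(f"distance to goal after planning: {math.dist(final, (5.0, 0.0)):.2f}")
```

Subtracting the best cost before exponentiating keeps the weights numerically stable, and the warm start lets each replanning step refine the previous plan instead of starting from zero. Swapping `rollout_cost` for a richer cost with weather and ethical-boundary terms is how the planning loop would enforce the safety constraints described earlier.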

This architecture moves AI from recognizing text patterns to building an understanding of grounded, high-stakes reality.

The simultaneous realization of these two distinct domain demos—from piloting conceptual flight paths to navigating life-critical clinical protocols—affirms a fundamental shift in the pursuit of AGI. This Hybrid AGI Blueprint is a decisive technical response to the core critiques levelled against Large Language Models by figures such as Yann LeCun.

The future of general intelligence lies not merely in human-level performance, but in deployable, trustworthy intelligence built to uphold the highest standards of safety in the complex reality of our world. This modular, hybrid architecture provides the practical, verifiable roadmap for achieving Advanced Machine Intelligence.

Keywords: Generative AI, Open Source, Agentic AI
