Jan 28

Somewhere along the way, AI became the convenient culprit.

When trust breaks, when decisions feel off, when people sense something important has shifted, AI is often blamed.

But here’s the quieter truth most leaders eventually confront.

AI didn’t break trust.

Leadership abdicated it.

Trust doesn’t disappear. It erodes

Trust rarely collapses in a single moment.

It thins.

It weakens at the edges.

It slips away quietly when decisions are made faster than they can be explained, or when responsibility becomes distributed just enough for no one to fully own it.

AI didn’t introduce this dynamic.

It simply exposed it.

The real discomfort leaders feel

When I sit with boards and executive teams, the anxiety isn’t really about technology.

It’s about accountability.

Leaders are asking questions they rarely voice out loud:

These aren’t technical questions.

They’re leadership questions.

And they surface whenever systems begin to decide with us, or for us.

Why ethics frameworks often miss the point

Many organisations respond by commissioning AI ethics principles, guidelines, or committees.

These are well-intentioned.

But too often they sit on the periphery, disconnected from how decisions actually get made.

Ethics becomes something we talk about, not something we design into everyday operations.

Trust doesn’t live in policy documents.

It lives in decision pathways.

Decision Trust Zones™

This is why I work with leaders on what I call Decision Trust Zones™.

Not as a compliance exercise.

But as a practical way to map:

The moment leaders stop treating all decisions as equal, clarity emerges.

Trust strengthens when people know who decides what, and why.

The illusion of neutrality

One of the most persistent myths about AI is neutrality.

Algorithms don’t remove bias.

They operationalise it.

They encode values, assumptions, priorities, and trade-offs, whether acknowledged or not.

When leaders say “the system decided,” what they often mean is “we didn’t slow down enough to notice the values we embedded.”

That’s not a technology failure.

That’s a governance gap.

Speed versus wisdom

AI accelerates decision-making.

Leadership must slow sense-making.

This tension is where trust is either reinforced or lost.

Organisations that chase speed without reflection gain efficiency but sacrifice legitimacy.

Those that deliberately insert human judgement at critical moments build resilience.

The difference isn’t capability.

It’s choice.

Why trust is now a strategic asset

Across finance, healthcare, government, education, and infrastructure, trust has become a form of currency.

Once lost, it’s expensive to rebuild.

Once questioned, every future decision is scrutinised more harshly.

AI didn’t create this reality.

It simply made it unavoidable.

PTFA™ and the hidden emotional layer

There’s another layer leaders underestimate.

What I call PTFA™: Past Trauma, Future Anxiety.

People don’t just worry about what AI might do.

They carry memories of previous restructures, broken promises, and opaque decisions.

AI becomes the trigger, not the cause.

Leaders who ignore this emotional residue misread resistance as ignorance, when it’s actually experience speaking.

The leadership shift that matters

The most trusted leaders I work with don’t pretend to have all the answers.

They do something far more effective.

They make decision-making visible.

They explain boundaries.

They articulate where AI supports judgement, not replaces it.

And they remain accountable, even when technology is involved.

That’s what restores trust.

A reframing worth sitting with

AI isn’t a moral agent.

Leadership is.

Systems don’t carry responsibility.

People do.

The organisations that navigate this era well won’t be the most automated.

They’ll be the most deliberate.

Not because they feared AI.

But because they understood trust doesn’t survive by accident.

Choose Forward

—

Morris Misel


Keywords: AI Ethics, Business Strategy, Leadership

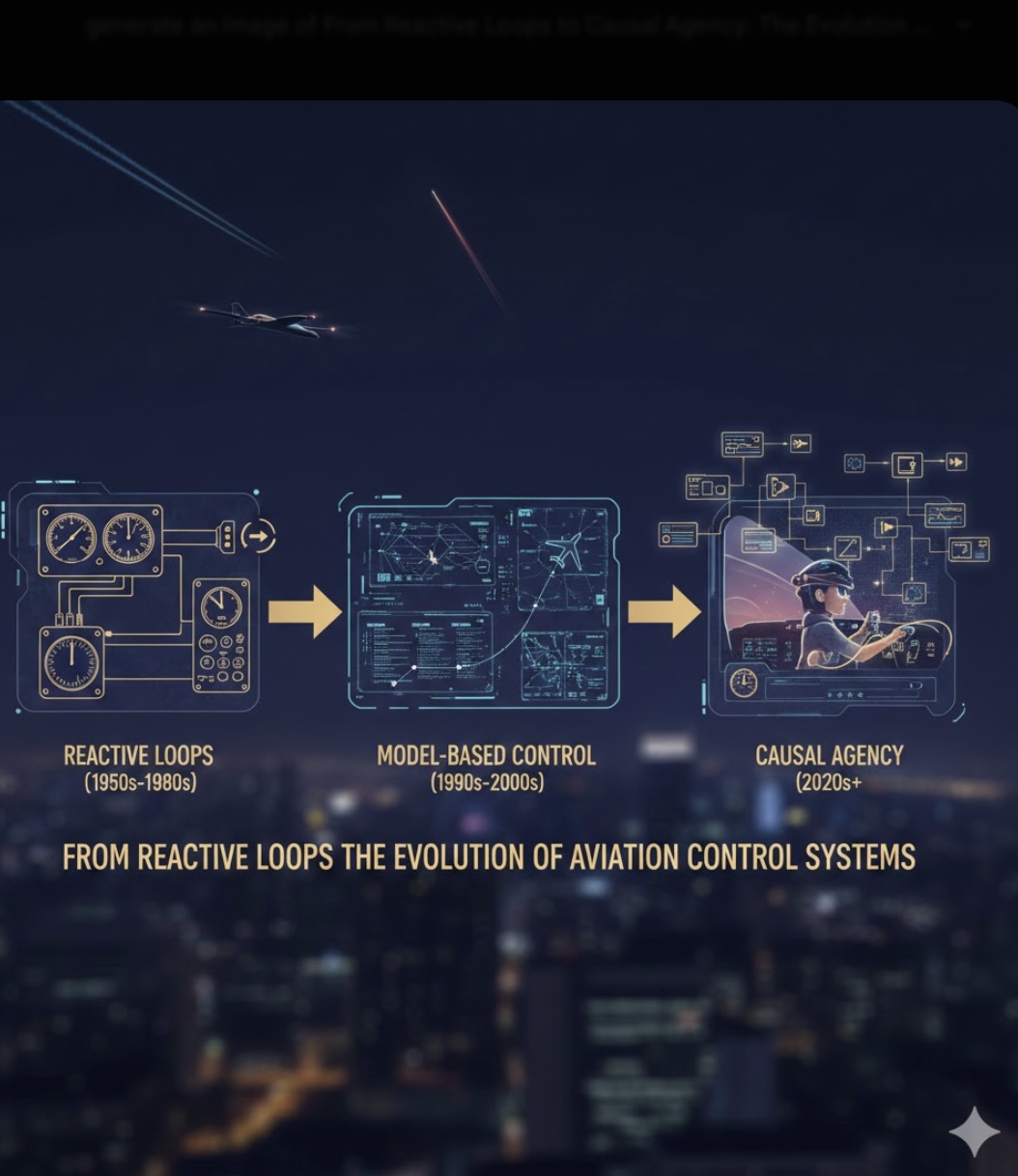

From Reactive Loops to Causal Agency: The Evolution of Aviation Control Systems

From Reactive Loops to Causal Agency: The Evolution of Aviation Control Systems What’s really holding your succession plan back: a lack of successors—or a lack of strategy?

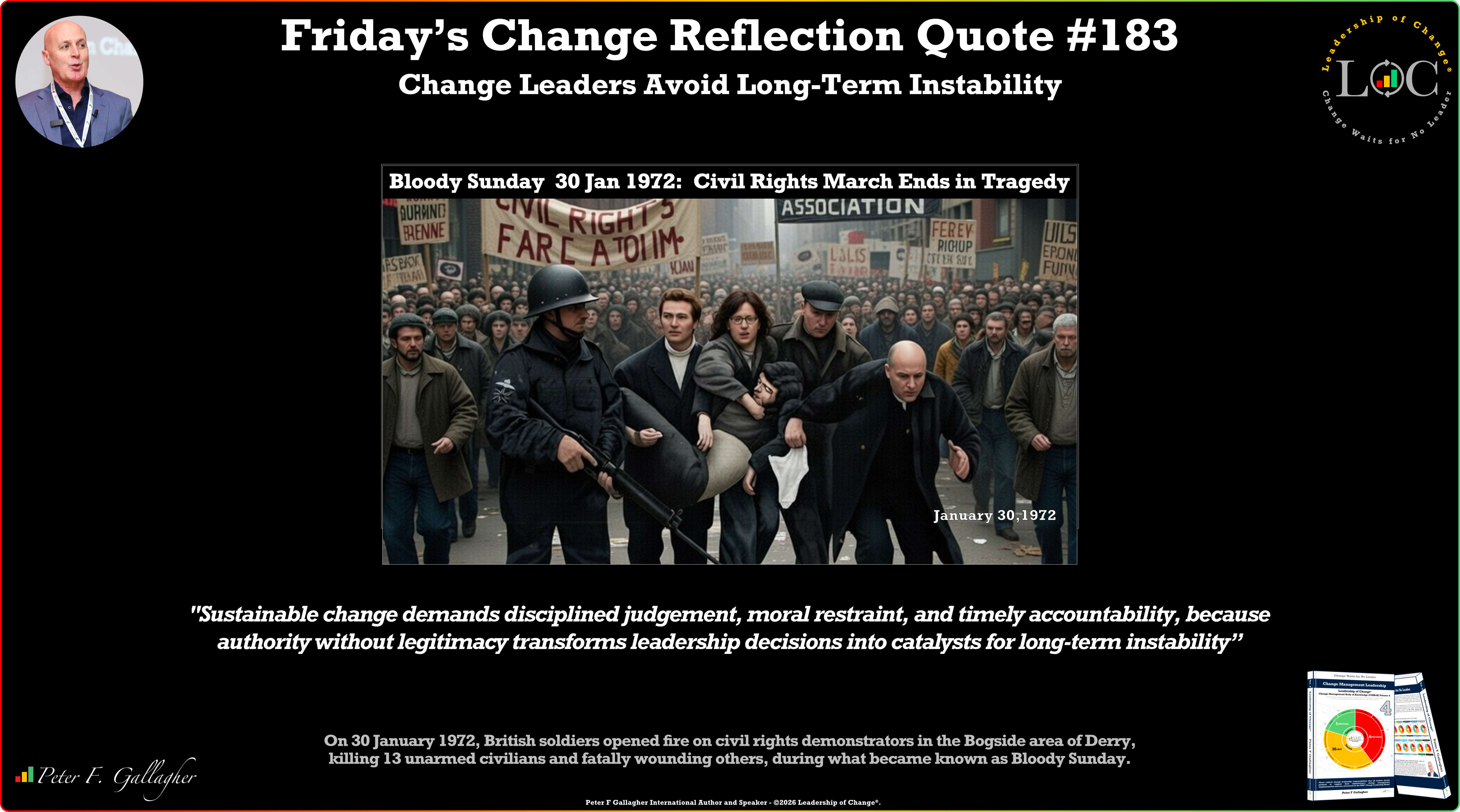

What’s really holding your succession plan back: a lack of successors—or a lack of strategy? Friday’s Change Reflection Quote - Leadership of Change - Change Leaders Avoid Long-Term Instability

Friday’s Change Reflection Quote - Leadership of Change - Change Leaders Avoid Long-Term Instability The Corix Partners Friday Reading List - January 30, 2026

The Corix Partners Friday Reading List - January 30, 2026 Why Long Duration Energy Storage Has Finally Reached Scale

Why Long Duration Energy Storage Has Finally Reached Scale