Nov02

The journey of Large Language Models (LLMs) from impressive research feats to enterprise-grade tools has been marked by a fundamental challenge: bridging the gap between vast linguistic knowledge and verifiable, real-time action. Early generations of LLMs, despite their fluency, were limited by static training data and a tendency to "hallucinate" facts. This critical deficiency motivated an architectural shift. The answer lay not in building larger models, but in augmenting them with external, searchable knowledge and complex decision-making capabilities. This imperative gave rise to the Agentic RAG (Retrieval-Augmented Generation) Tech Stack, a nine-level architecture that transforms inert models into reliable, autonomous agents. Ranging from Level 0 (Infrastructure) to Level 8 (Governance), this stack reveals that successful, trustworthy AI is fundamentally an engineering challenge—one that requires a cohesive, multi-level system to deliver grounded intelligence and measurable integrity.

The stack breaks down into nine essential levels, listed here from top to bottom:

Level 8: Safety & Governance

Focus: Ensuring ethical, safe, and compliant deployment.

Tools: Langfuse, Arize, Guardrails AI, NELM.

Level 7: Memory & Context Management

Focus: Managing conversation history and context for agents.

Tools: Letta, Mem0, Zep, Chroma.

Level 6: Data Ingestion & Extraction

Focus: Getting data into a usable format, often for embedding and storage.

Tools: Scrapy, Beautiful Soup, Apache Tika.

Level 5: Embedding Models

Focus: Transforming data (text, images, etc.) into numerical vectors.

Tools: OpenAI, spaCy, Cohere, Hugging Face.

Level 4: Vector Databases

Focus: Storing and indexing the numerical vectors for fast retrieval.

Tools: Chroma, Pinecone, Milvus, Redis, pgvector.

Level 3: Orchestration Frameworks

Focus: Managing the workflow and logic between the different components (retrieval, generation, memory).

Tools: LangChain, DSPy, Haystack, LiteLLM.

Level 2: Foundation Models

Focus: The core Large Language Models (LLMs) used for generation.

Tools: Gemini 2.5 Pro, Mistral AI, Claude 3, LLaMA 4, DeepSeek.

Level 1: Evaluation & Monitoring

Focus: Testing model performance, identifying bias, and tracking usage.

Tools: LangSmith, MLflow, Ragas, Fairlearn, Holistic AI.

Level 0: Deployment & Infrastructure

Focus: The platforms and services used to host and run the entire stack.

Tools: Groq, together.ai, Modal, Replicate.

At the core of the stack lies the essential grounding mechanism. This begins with Level 2: Foundation Models (e.g., Gemini 2.5 Pro, Claude), which are large neural networks that provide the core reasoning capability. Crucially, these models are made current and domain-specific by integrating with Level 5: Embedding Models and Level 4: Vector Databases (like Pinecone or Chroma). The Embedding Models transform proprietary or external data into numerical vectors, which the Vector Databases store and index for rapid, semantic similarity search. This integration is the essence of RAG, ensuring the LLM is factually grounded in verifiable information, mitigating the pervasive problem of hallucination.
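
The retrieval step described above can be sketched in a few lines. This is a minimal illustration, not how any particular product works: the `embed` function is a toy bag-of-words stand-in for a real Level 5 embedding model, `InMemoryVectorStore` is a hypothetical stand-in for a Level 4 vector database, and the sample documents are invented for the example.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database: stores (text, vector) rows."""

    def __init__(self) -> None:
        self._rows: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self._rows.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> list[str]:
        """Return the k stored texts most similar to the query."""
        q = embed(query)
        ranked = sorted(self._rows, key=lambda row: cosine(q, row[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_grounded_prompt(question: str, store: InMemoryVectorStore, k: int = 2) -> str:
    """Prepend retrieved passages so the model answers from supplied context."""
    context = "\n".join(f"- {doc}" for doc in store.search(question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


store = InMemoryVectorStore()
store.add("Refunds are accepted within 30 days of purchase")
store.add("Our office is open Monday to Friday")
store.add("Shipping takes five business days")
prompt = build_grounded_prompt("refunds within how many days", store, k=1)
```

In a real deployment the count vectors would be replaced by dense vectors from an embedding model and the linear scan by an approximate nearest-neighbour index, but the flow (embed, search, assemble a grounded prompt) stays the same.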

Building upon this grounded core is the intelligence and control layer, which is critical for agentic behaviour. Level 3: Orchestration Frameworks (such as LangChain or DSPy) serve as the central nervous system, defining the sequence of actions: deciding when to search the vector database, when to call an external tool, or when to generate a response. This orchestration requires clean and relevant data, handled by Level 6: Data Ingestion & Extraction tools (like Apache Tika), and a persistent working memory, provided by Level 7: Memory & Context Management. These memory systems are crucial for conversational coherence, enabling agents to keep state across turns and engage in multi-step planning and decision-making.
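
The control loop described above might look roughly like the following sketch. It does not use the API of LangChain, DSPy, or any other framework: `AgentState`, `choose_action`, `run_agent`, and the stub tools are all hypothetical names, and the rule-based policy stands in for a decision that a real agent would usually delegate to the LLM.

```python
from dataclasses import dataclass, field


@dataclass
class AgentState:
    """Working memory for one task: the question plus every step taken so far."""
    question: str
    memory: list = field(default_factory=list)  # (action, observation) pairs


def choose_action(state: AgentState) -> str:
    """Hypothetical policy: retrieve first, calculate if digits appear, then answer."""
    done = {action for action, _ in state.memory}
    if "retrieve" not in done:
        return "retrieve"
    if any(ch.isdigit() for ch in state.question) and "calculate" not in done:
        return "calculate"
    return "generate"


def run_agent(question: str, tools: dict, max_steps: int = 5) -> AgentState:
    """Loop: pick an action, run the matching tool, record the result in memory."""
    state = AgentState(question)
    for _ in range(max_steps):
        action = choose_action(state)
        observation = tools[action](state)
        state.memory.append((action, observation))
        if action == "generate":
            break
    return state


# Stub tools standing in for a vector search, an external tool, and an LLM call.
STUB_TOOLS = {
    "retrieve": lambda s: f"stub context for: {s.question}",
    "calculate": lambda s: "stub calculation result",
    "generate": lambda s: f"answer based on {len(s.memory)} prior steps",
}

state = run_agent("What is 2 plus 2?", STUB_TOOLS)
actions = [action for action, _ in state.memory]
```

Keeping every action and observation in `state.memory` is what lets later steps see earlier ones, which is the role the memory level plays in multi-step planning.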

Finally, the integrity and viability of the entire system are determined by the MLOps and regulatory layers at the bottom and top of the stack. Level 0: Deployment & Infrastructure ensures that the apparatus as a whole, from the Vector Database to the LLM endpoints, is hosted efficiently and scalably. More critical for production are Level 1: Evaluation & Monitoring (e.g., LangSmith, Weights & Biases), which continuously measures metrics such as retrieval accuracy and output fairness, and Level 8: Safety & Governance. This top layer, using tools like Guardrails AI, enforces guardrails against harmful or non-compliant outputs, transforming a powerful but unconstrained model into a compliant, enterprise-grade asset.
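
As a rough illustration of these two layers, the sketch below computes one common retrieval metric (recall at k) and applies a very simple output check. Neither function reflects the actual API of LangSmith, Guardrails AI, or any other tool named here; `recall_at_k`, `apply_guardrail`, the blocked pattern, and the withheld-response message are assumptions made for the example.

```python
import re


def recall_at_k(retrieved: list[str], relevant: list[str], k: int) -> float:
    """Fraction of the relevant documents that appear in the top-k retrieved."""
    relevant_set = set(relevant)
    if not relevant_set:
        return 0.0
    hits = set(retrieved[:k]) & relevant_set
    return len(hits) / len(relevant_set)


# Hypothetical policy: block anything shaped like a US Social Security number.
BLOCKED_PATTERNS = [re.compile(r"\b\d{3}-\d{2}-\d{4}\b")]
WITHHELD = "[response withheld: policy violation]"


def apply_guardrail(output: str) -> str:
    """Return the output unchanged, or a placeholder if any blocked pattern matches."""
    for pattern in BLOCKED_PATTERNS:
        if pattern.search(output):
            return WITHHELD
    return output


score = recall_at_k(["d1", "d4", "d2"], ["d1", "d2"], k=2)
safe = apply_guardrail("The customer's number is 123-45-6789.")
```

Production guardrails typically combine many such checks (pattern rules, classifiers, schema validation), and evaluation usually tracks several metrics over time rather than a single score.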

Ultimately, the Agentic RAG Tech Stack signifies the end of the "model-only" era in AI development. The nine essential levels, working in concert—from the factual grounding of RAG (Levels 4 and 5) to the autonomous control of Orchestration (Level 3) and the ethical mandates of Governance (Level 8)—demonstrate that power alone is insufficient. Actual impact requires reliability, verifiability, and oversight. This sophisticated architecture has transformed the Large Language Model from a powerful oracle into a trustworthy, accountable team member, paving the way for the age of autonomous agents that can be safely and effectively deployed across every industry.

Keywords: Agentic AI, Generative AI, Open Source

The Architectures of Permanence: A Comparative Analysis of the "Big Three" AI Strategies (2026)

The Architectures of Permanence: A Comparative Analysis of the "Big Three" AI Strategies (2026) Friday’s Change Reflection Quote - Leadership of Change - Change Leaders Enable Generational Advancement

Friday’s Change Reflection Quote - Leadership of Change - Change Leaders Enable Generational Advancement The Corix Partners Friday Reading List - February 27, 2026

The Corix Partners Friday Reading List - February 27, 2026 What Leaders Should Be Losing Sleep Over (But Aren’t)

What Leaders Should Be Losing Sleep Over (But Aren’t) Energy System Resilience: Lessons Europe Must Learn from Ukraine

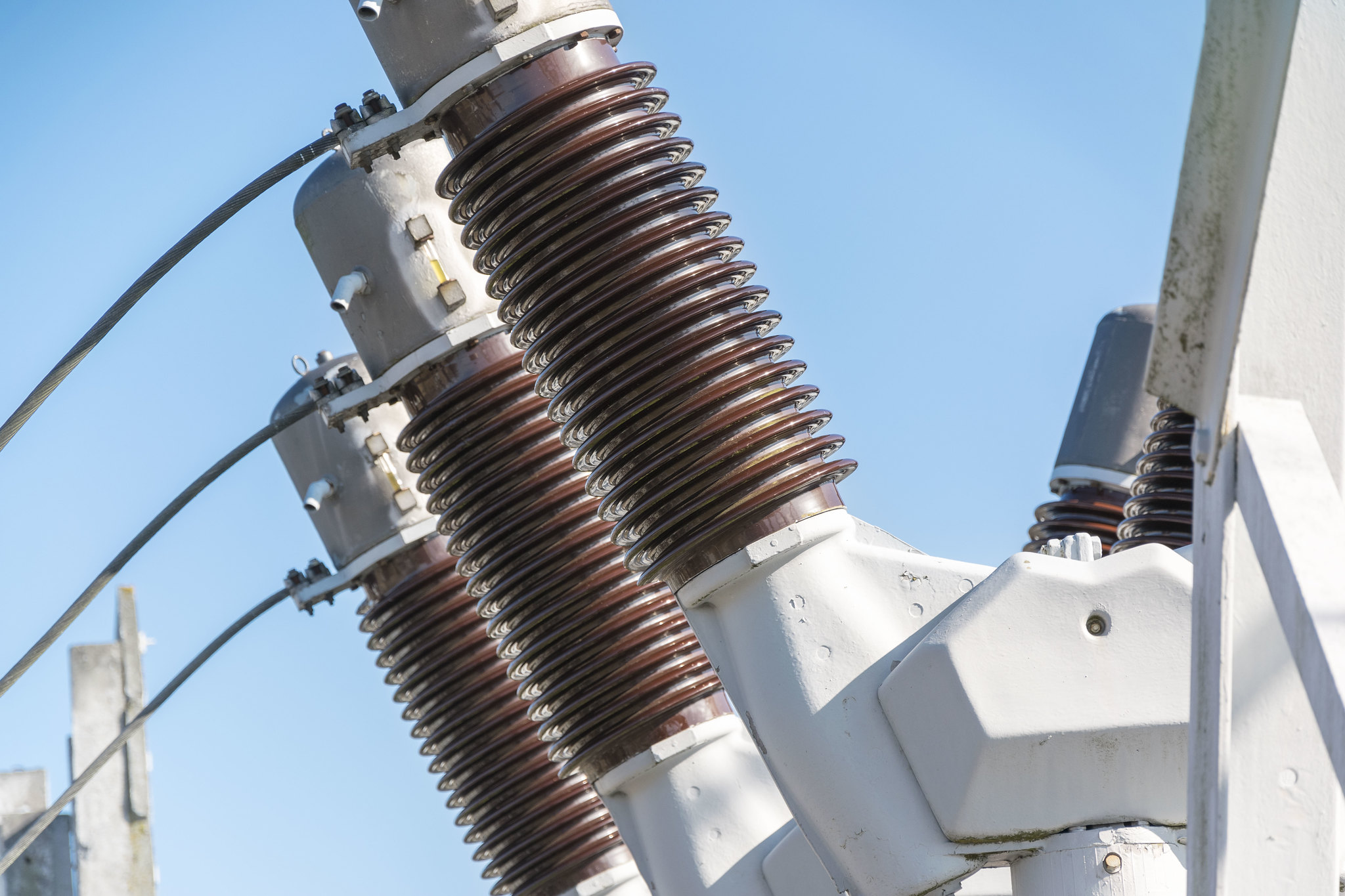

Energy System Resilience: Lessons Europe Must Learn from Ukraine