Dec03

The artificial intelligence industry is currently defined by a hyper-competitive trilemma, where advances in model capability, infrastructure efficiency, and commercial viability interact to accelerate the path toward Artificial General Intelligence (AGI). The recent confluence of OpenAI’s internal “code red,” Amazon’s launch of the cost-disruptive Trainium3 chip, and Mistral AI’s release of the open-source, multimodal Mistral Large 3 model reveals that the race is no longer simply about building the biggest model, but about forging the modular, efficient, and reliable ecosystem required to deploy truly autonomous, agentic systems. This intense competitive pressure is forcing the industry to focus on the essential building blocks—efficiency and modularity—that must be solved before AGI can be realized.

The development of sophisticated Agentic AI (systems capable of autonomous planning, tool use, and long-term goal execution) is fundamentally dependent on model capability, a domain that Mistral AI’s latest release has significantly advanced. The Mistral Large 3 model pairs a Sparse Mixture-of-Experts (MoE) architecture (41 billion parameters active in a forward pass, out of 675 billion in total) with a large 256K context window and native multimodal (vision) and multilingual support across 40+ languages, providing the foundational intelligence required for multi-step tasks. Its instruction-tuned version has achieved parity with the strongest closed models and ranked #2 among open-source non-reasoning models, signalling world-class performance. Crucially, its permissive Apache 2.0 open-source license democratizes access to this frontier capability, moving the development of advanced agents out of a few proprietary labs and into the broader developer community. Agentic systems thrive on tool use and structured outputs (like JSON); by baking superior function-calling capabilities into an efficient MoE model, Mistral delivers the intelligence at scale needed for complex decision-making while maintaining high operational efficiency. This innovation, complemented by the compact Ministral 3 family for edge deployment, matters for AGI, which is widely predicted to manifest not as a single monolithic model but as a network of highly specialized, interacting agents.
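To make the efficiency claim concrete, the short Python sketch below works out how small a share of the model is exercised on each forward pass, using only the two parameter counts quoted above. The function name `active_fraction` is a hypothetical helper chosen for illustration, and the arithmetic says nothing about real-world latency or memory use.

```python
# Figures quoted in the text for Mistral Large 3.
TOTAL_PARAMS = 675e9   # total parameter pool
ACTIVE_PARAMS = 41e9   # parameters active in one forward pass


def active_fraction(active: float, total: float) -> float:
    """Share of the parameter pool that is used per forward pass."""
    if total <= 0 or not 0 <= active <= total:
        raise ValueError("expected total > 0 and 0 <= active <= total")
    return active / total


fraction = active_fraction(ACTIVE_PARAMS, TOTAL_PARAMS)
print(f"Active per forward pass: {fraction:.1%} of all parameters")
print(f"Total-to-active ratio: {TOTAL_PARAMS / ACTIVE_PARAMS:.1f}x")
```

Roughly 6% of the parameters do the work for any given token. As a rule of thumb, per-token compute in a sparse MoE tracks the active count while memory footprint tracks the total, which is why such a model can be large in capacity yet comparatively cheap to run.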

However, complex agent networks require massive, continuous computational power, making the economics of AI infrastructure the second, indispensable driver. Amazon’s announcement of the Trainium3 chip, promising up to 50% lower training and operating costs compared to existing GPUs, addresses the core financial obstacle to large-scale AI deployment. Built on 3-nanometer technology, the Trn3 UltraServers deliver up to 4.4 times the compute performance and 40% greater energy efficiency than their predecessor, scaling up to 144 chips per system. This performance, already being used for production workloads by OpenAI rivals such as Anthropic, makes AI development and inference radically cheaper. A single complex agent executing hundreds of intermediate thoughts, API calls, and long-range planning steps generates dramatically more inference usage than a simple, single-query chatbot. If AGI is to be built from thousands of simultaneously running agents, the cost of running those agents must approach zero. Trainium3, alongside Google's TPU efforts, challenges Nvidia's market dominance by creating a much-needed environment of cost competition. Most significantly, Amazon's strategic decision to have Trainium4 support Nvidia's NVLink Fusion interconnect technology is a pragmatic hedge, offering enterprises a path to diversify their hardware reliance without abandoning the dominant CUDA ecosystem entirely. The infrastructure war is, therefore, a quiet but profound accelerator of AGI’s deployment potential.
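The gap between a chatbot query and an agent run can be sketched with a back-of-the-envelope cost model in Python. Every number below is an illustrative placeholder rather than a published price or measurement: the 2,000 tokens per call, the 1 USD per million tokens, and the 200 model calls per agent task are assumptions, and `task_cost` is a hypothetical helper. The 50% reduction is the "up to" figure from the text, treated here as a best case.

```python
def task_cost(model_calls: int, tokens_per_call: int,
              usd_per_million_tokens: float) -> float:
    """Inference cost of one task in USD under a flat per-token price."""
    return model_calls * tokens_per_call * usd_per_million_tokens / 1_000_000


# Illustrative placeholders, not measured or published figures.
TOKENS_PER_CALL = 2_000
USD_PER_MILLION_TOKENS = 1.00
AGENT_CALLS = 200          # "hundreds" of intermediate steps per task
FLEET_SIZE = 1_000         # "thousands" of agents, lower bound

chatbot = task_cost(1, TOKENS_PER_CALL, USD_PER_MILLION_TOKENS)
agent = task_cost(AGENT_CALLS, TOKENS_PER_CALL, USD_PER_MILLION_TOKENS)
# Best case from the text: costs up to 50% lower, applied to the token price.
agent_discounted = task_cost(AGENT_CALLS, TOKENS_PER_CALL,
                             USD_PER_MILLION_TOKENS * 0.5)

print(f"Chatbot query:        ${chatbot:.4f}")
print(f"Agent task:           ${agent:.4f} ({agent / chatbot:.0f}x the query)")
print(f"Agent task, -50%:     ${agent_discounted:.4f}")
print(f"Fleet, one task each: ${FLEET_SIZE * agent_discounted:,.2f}")
```

Under these assumptions the agent costs as many times more than the chatbot as it makes model calls, so a halved unit price helps but does not offset the multiplier on its own. That is the sense in which per-agent costs need to keep falling toward zero.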

Finally, the competitive crisis at OpenAI highlights the essential need for core product reliability and usability, qualities that must precede any attempt to deploy AGI. Sam Altman's "code red" directive, redirecting resources away from new revenue initiatives (like shopping agents and ads) to focus entirely on improving ChatGPT's speed, reliability, and personalization, signals a crucial maturation in the industry. For agentic AI systems to function in the real world (e.g., managing a budget or scheduling complex events), they cannot be slow, unreliable, or prone to catastrophic failure. An autonomous agent must be fundamentally trustworthy. The focus on improved personalization is also key, as AGI systems must be capable of maintaining long-term state, learning from cumulative interactions, and adapting their persona and output to individual users, a core requirement for any truly general intelligence. This "code red" is thus less a retreat and more a tactical prioritization of the stability and trust layers upon which any ambitious AGI project must be built.

In conclusion, the current landscape, marked by competitive urgency (OpenAI), infrastructure efficiency (Trainium3), and open innovation (Mistral Large 3), is rapidly establishing the prerequisites for AGI. The fight for market share is driving down computational costs and forcing the development of specialized, efficient, and reliable models well-suited for agentic deployment. The path to AGI may not be defined by a single, sudden research breakthrough. Instead, cost-effective, modular, and multimodal agentic building blocks are gradually converging under competitive pressure, and they are being created and scaled at an unprecedented rate across the entire technology stack.

Keywords: Agentic AI, Generative AI, Open Source

The Architectures of Permanence: A Comparative Analysis of the "Big Three" AI Strategies (2026)

The Architectures of Permanence: A Comparative Analysis of the "Big Three" AI Strategies (2026) Friday’s Change Reflection Quote - Leadership of Change - Change Leaders Enable Generational Advancement

Friday’s Change Reflection Quote - Leadership of Change - Change Leaders Enable Generational Advancement The Corix Partners Friday Reading List - February 27, 2026

The Corix Partners Friday Reading List - February 27, 2026 What Leaders Should Be Losing Sleep Over (But Aren’t)

What Leaders Should Be Losing Sleep Over (But Aren’t) Energy System Resilience: Lessons Europe Must Learn from Ukraine

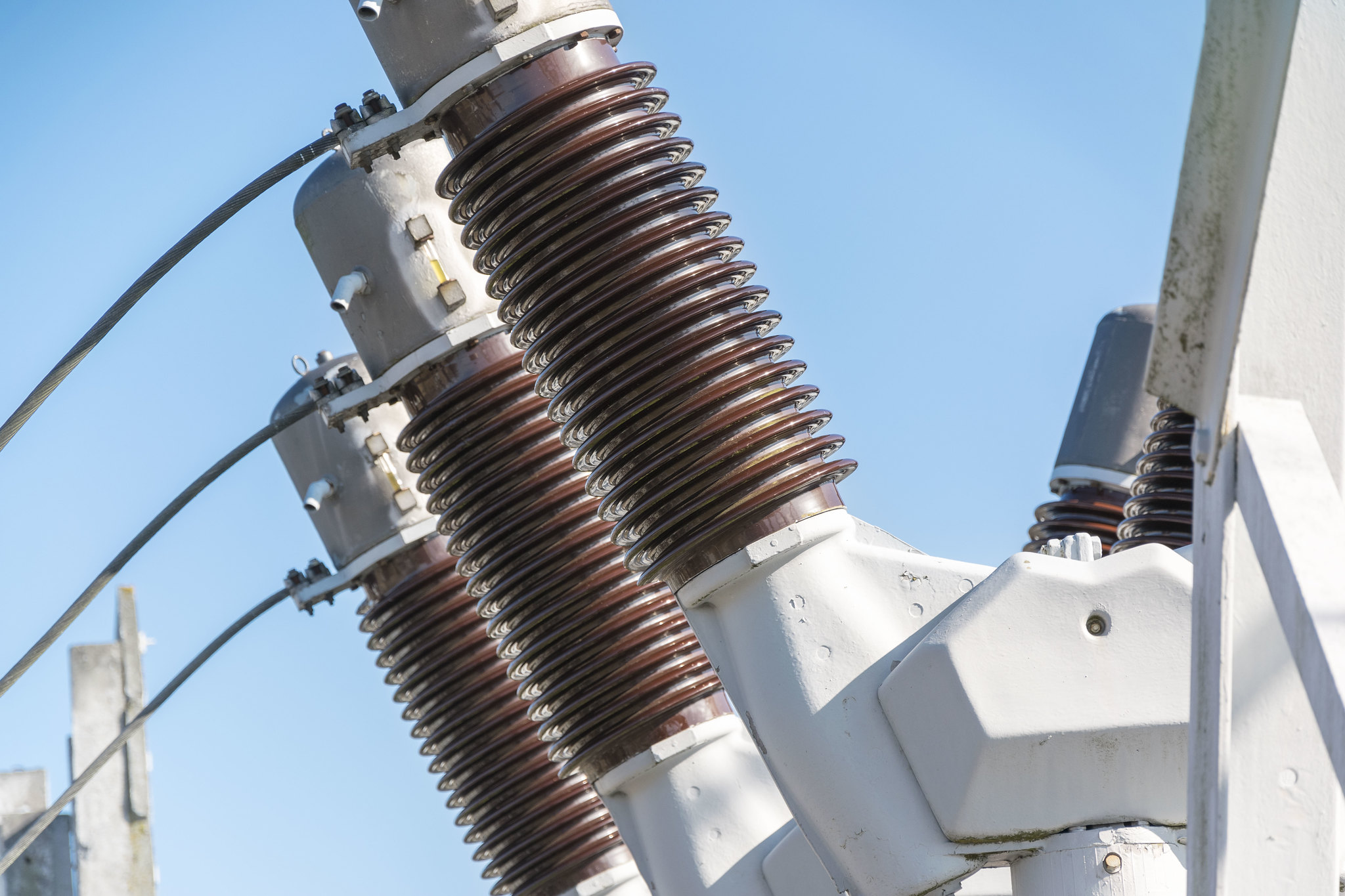

Energy System Resilience: Lessons Europe Must Learn from Ukraine